Kaidos' Tech Blog

PKOS (Kubernetes) Week 3 - Ingress & Storage

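Lab environment deployment: c5d.large nodes

Deploy the CloudFormation stack with these settings, then run the lab.

c5d.large includes an EC2 instance store (temporary block storage) on NVMe SSD.

- Data on it is lost when the underlying disk drive fails, or when the instance is stopped, hibernated, or terminated.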

# Switch to the default namespace

kubectl ns default

Context "pjhtest.click" modified.

Active namespace is "default".

# Storage size of every c5 instance type that has an instance store volume

aws ec2 describe-instance-types \
  --filters "Name=instance-type,Values=c5*" "Name=instance-storage-supported,Values=true" \
  --query "InstanceTypes[].[InstanceType, InstanceStorageInfo.TotalSizeInGB]" \
  --output table

--------------------------

| DescribeInstanceTypes |

+---------------+--------+

| c5d.large | 50 |

| c5d.12xlarge | 1800 |

| c5d.24xlarge | 3600 |

| c5d.18xlarge | 1800 |

| c5d.2xlarge | 200 |

| c5d.4xlarge | 400 |

| c5d.xlarge | 100 |

| c5d.metal | 3600 |

| c5d.9xlarge | 900 |

+---------------+--------+

# Check the worker nodes' public IPs

aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value}" --filters Name=instance-state-name,Values=running --output table

-------------------------------------------------------------------

| DescribeInstances |

+-----------------------------------------------+-----------------+

| InstanceName | PublicIPAdd |

+-----------------------------------------------+-----------------+

| nodes-ap-northeast-2c.pjhtest.click | 43.200.176.11 |

| master-ap-northeast-2a.masters.pjhtest.click | 43.201.84.6 |

| kops-ec2 | 13.124.181.28 |

| nodes-ap-northeast-2a.pjhtest.click | 3.38.209.90 |

+-----------------------------------------------+-----------------+

# Store the worker node public IPs in variables

W1PIP=<worker node 1 public IP>

W2PIP=<worker node 2 public IP>

W1PIP=3.38.209.90

W2PIP=43.200.176.11

echo "export W1PIP=$W1PIP" >> /etc/profile

echo "export W2PIP=$W2PIP" >> /etc/profile

# Check the worker node storage: confirm the NVMe SSD instance store volume

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP lsblk -e 7 -d

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP lsblk -e 7

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP df -hT -t ext4

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP df -hT -t ext4

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP lspci | grep Non-Volatile

00:04.0 Non-Volatile memory controller: Amazon.com, Inc. Device 8061

00:1f.0 Non-Volatile memory controller: Amazon.com, Inc. NVMe SSD Controller

# Create a filesystem and mount it at /data

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP sudo mkfs -t xfs /dev/nvme1n1

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP sudo mkfs -t xfs /dev/nvme1n1

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP sudo mkdir /data

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP sudo mkdir /data

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP sudo mount /dev/nvme1n1 /data

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP sudo mount /dev/nvme1n1 /data

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP df -hT -t ext4 -t xfs

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP df -hT -t ext4 -t xfs

Filesystem Type Size Used Avail Use% Mounted on

/dev/root ext4 124G 3.5G 121G 3% /

/dev/nvme1n1 xfs 47G 365M 47G 1% /data
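The instance store is listed as 50 for c5d.large in the table above, but df reports 47G for /dev/nvme1n1. I am assuming that AWS's TotalSizeInGB is in decimal gigabytes (10^9 bytes), and df -h prints binary units. That assumption is consistent with the 47G shown, and under it the gap is just unit conversion. The helper name gb_to_gib is made up for this sketch:

```bash
# gb_to_gib GB: convert decimal gigabytes (10^9 bytes) to binary gibibytes (2^30 bytes).
# Assumption: the AWS instance store size (TotalSizeInGB) is given in decimal GB.
gb_to_gib() {
  awk -v gb="$1" 'BEGIN { printf "%.2f\n", gb * 1000 * 1000 * 1000 / (1024 * 1024 * 1024) }'
}

gb_to_gib 50    # c5d.large instance store
gb_to_gib 100   # c5d.xlarge instance store
```

50 GB comes out at about 46.57 GiB, which df -h displays as 47G. The few hundred MB already "used" on the fresh filesystem is most likely XFS's own log and metadata.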

Ingress

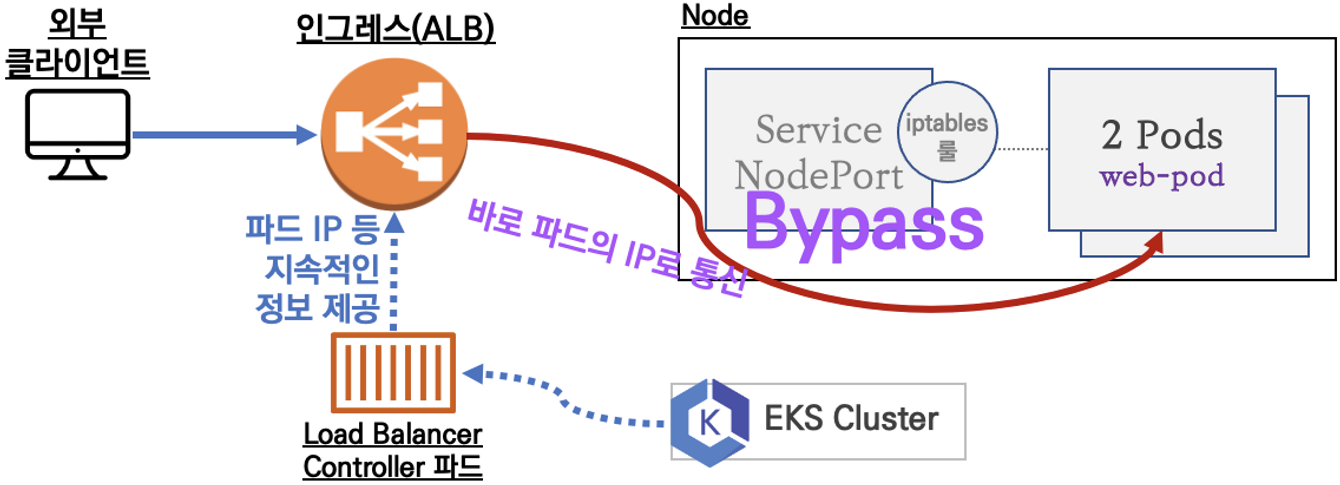

Ingress overview: exposes in-cluster Services (ClusterIP, NodePort, LoadBalancer) outside the cluster over HTTP/HTTPS, acting as a web proxy

Configure the EC2 instance profiles, deploy the AWS Load Balancer Controller, and install ExternalDNS

# Add the AWSLoadBalancerControllerIAMPolicy policy to the EC2 IAM roles of the master/worker nodes

## Create the IAM policy: skip this if you already created it in week 2

curl -o iam_policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.4.5/docs/install/iam_policy.json

aws iam create-policy --policy-name AWSLoadBalancerControllerIAMPolicy --policy-document file://iam_policy.json

# Attach the IAM policy to the EC2 instance profile roles

aws iam attach-role-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --role-name masters.$KOPS_CLUSTER_NAME

aws iam attach-role-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --role-name nodes.$KOPS_CLUSTER_NAME

# Create the IAM policy: skip this if you already created it in week 2

curl -s -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/AKOS/externaldns/externaldns-aws-r53-policy.json

aws iam create-policy --policy-name AllowExternalDNSUpdates --policy-document file://externaldns-aws-r53-policy.json

# Attach the IAM policy to the EC2 instance profile roles

aws iam attach-role-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AllowExternalDNSUpdates --role-name masters.$KOPS_CLUSTER_NAME

aws iam attach-role-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AllowExternalDNSUpdates --role-name nodes.$KOPS_CLUSTER_NAME
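The four attach-role-policy calls above all follow one pattern: two policies, each attached to the two kOps instance-profile roles (masters.<cluster> and nodes.<cluster>). As a sketch, a hypothetical helper print_attach_cmds can generate them as a dry run, so you can review the ARNs before executing anything. The account ID in the example is a placeholder.

```bash
# print_attach_cmds ACCOUNT_ID CLUSTER_NAME
# Prints (does not run) one attach-role-policy command per policy/role pair.
print_attach_cmds() {
  account_id=$1
  cluster=$2
  for policy in AWSLoadBalancerControllerIAMPolicy AllowExternalDNSUpdates; do
    for role in masters nodes; do
      echo "aws iam attach-role-policy --policy-arn arn:aws:iam::${account_id}:policy/${policy} --role-name ${role}.${cluster}"
    done
  done
}

# Placeholder account ID, for illustration only
print_attach_cmds 123456789012 pjhtest.click
```

After checking the printed commands, `print_attach_cmds "$ACCOUNT_ID" "$KOPS_CLUSTER_NAME" | sh` runs the same four commands as above.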

# Edit the kOps cluster: add the following

kops edit cluster

-----

spec:
  certManager:
    enabled: true
  awsLoadBalancerController:
    enabled: true
  externalDns:
    provider: external-dns

-----

# Apply the update

kops update cluster --yes && echo && sleep 3 && kops rolling-update cluster

NAME STATUS NEEDUPDATE READY MIN TARGET MAX NODES

master-ap-northeast-2a Ready 0 1 1 1 1 1

nodes-ap-northeast-2a Ready 0 1 1 1 1 1

nodes-ap-northeast-2c Ready 0 1 1 1 1 1

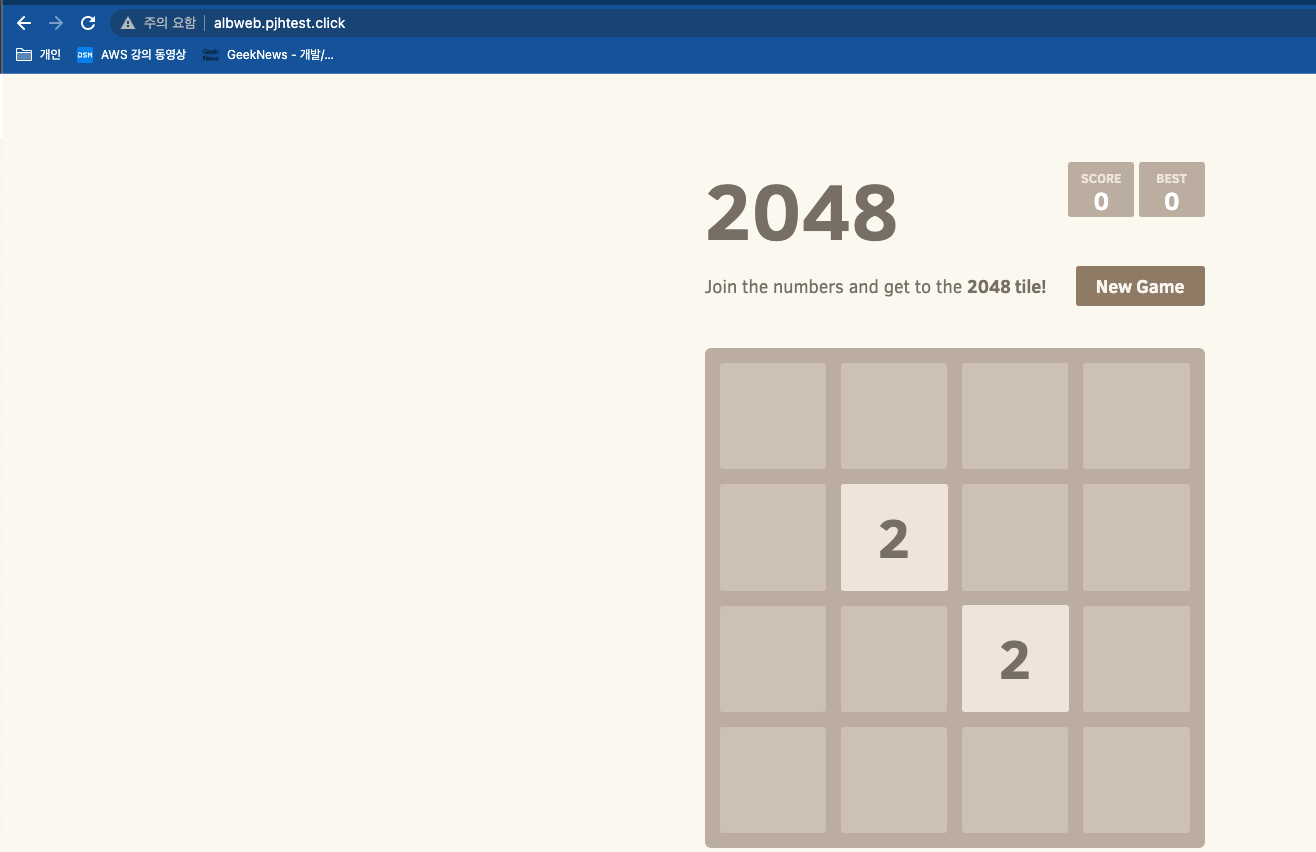

Service/Pod deployment test with Ingress (ALB)

# Deploy the game Pods, Service, and Ingress

cat ~/pkos/3/ingress1.yaml | yh

kubectl apply -f ~/pkos/3/ingress1.yaml

namespace/game-2048 created

deployment.apps/deployment-2048 created

service/service-2048 created

ingress.networking.k8s.io/ingress-2048 created

# Verify the created resources

kubectl get-all -n game-2048

kubectl get ingress,svc,ep,pod -n game-2048

kubectl get targetgroupbindings -n game-2048

NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE

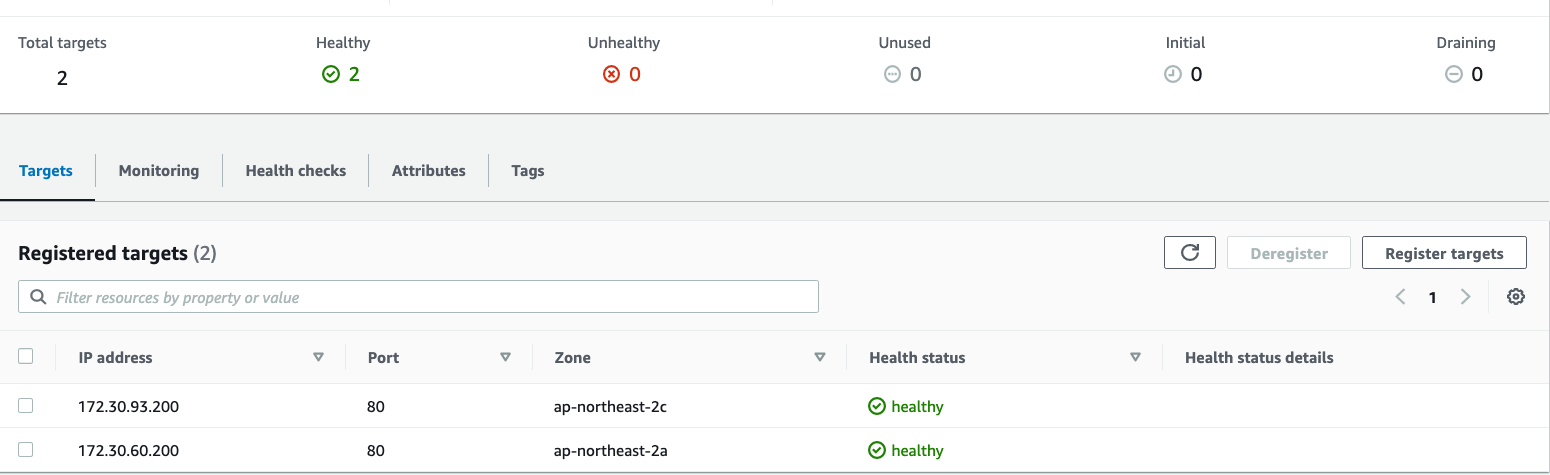

k8s-game2048-service2-ad0816e3ee service-2048 80 ip 21s

# Inspect the Ingress

kubectl describe ingress -n game-2048 ingress-2048

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfullyReconciled 39s ingress Successfully reconciled

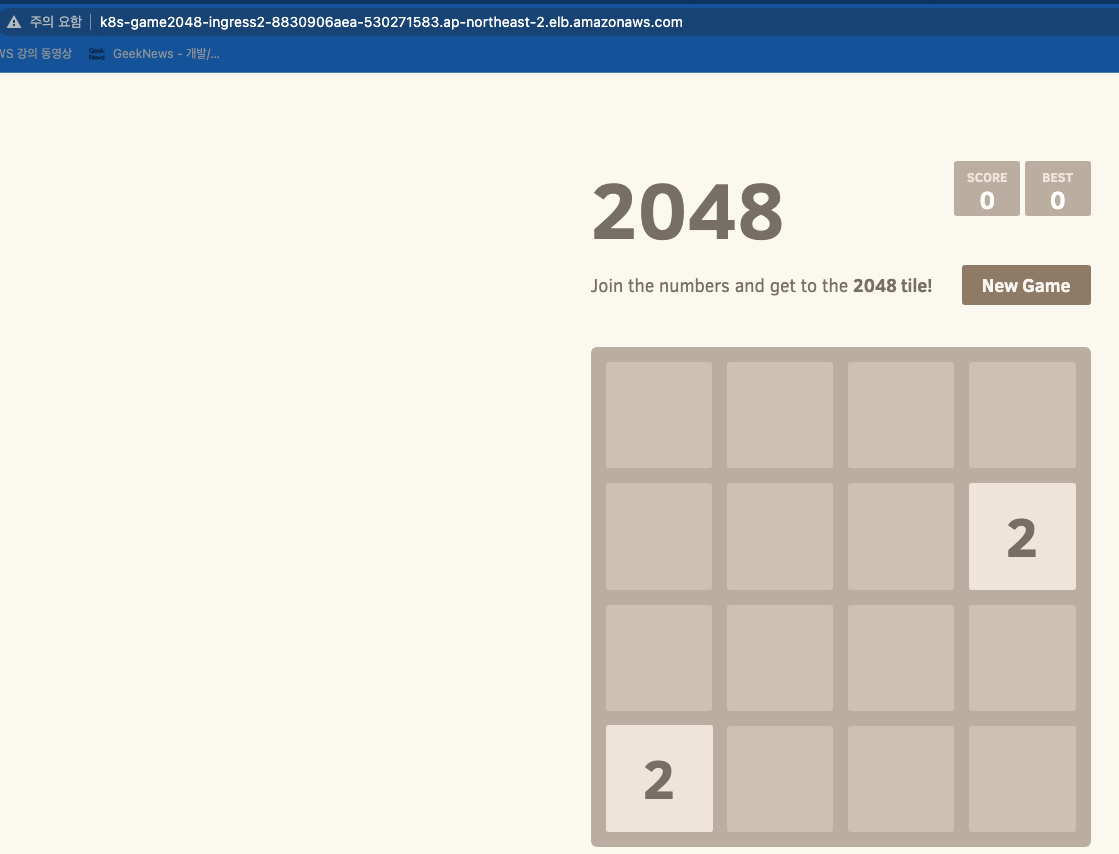

# Open the game: browse to the ALB address

kubectl get ingress -n game-2048 ingress-2048 -o jsonpath={.status.loadBalancer.ingress[0].hostname} | awk '{ print "Game URL = http://"$1 }'

Game URL = http://k8s-game2048-ingress2-8830906aea-530271583.ap-northeast-2.elb.amazonaws.com

# Check the Pod IPs

kubectl get pod -n game-2048 -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-2048-6bc9fd6bf5-bg9qb 1/1 Running 0 2m7s 172.30.93.200 i-0458272df9432299c <none> <none>

deployment-2048-6bc9fd6bf5-qn25f 1/1 Running 0 2m7s 172.30.60.200 i-07f6b0abf6ee6c5ab <none> <none>

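Check the targets registered in the ALB target group: the ALB sends traffic directly to the Pod IPs (target type ip)

Scale up to 3 Pods

# Terminal 1

watch kubectl get pod -n game-2048

# Terminal 2: scale up to 3 Pods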

kubectl scale deployment -n game-2048 deployment-2048 --replicas 3

NAME READY STATUS RESTARTS AGE

deployment-2048-6bc9fd6bf5-bg9qb 1/1 Running 0 7m

deployment-2048-6bc9fd6bf5-qhfq7 1/1 Running 0 10s

deployment-2048-6bc9fd6bf5-qn25f 1/1 Running 0 7m

Scale down to 1 Pod

kubectl scale deployment -n game-2048 deployment-2048 --replicas 1

NAME READY STATUS RESTARTS AGE

deployment-2048-6bc9fd6bf5-qn25f 1/1 Running 0 7m31s

Clean up the lab resources

kubectl delete ingress ingress-2048 -n game-2048

kubectl delete svc service-2048 -n game-2048 && kubectl delete deploy deployment-2048 -n game-2048 && kubectl delete ns game-2048

Ingress with ExternalDNS

# Set a variable: your own fully qualified domain

WEBDOMAIN=<your web server domain>

WEBDOMAIN=albweb.pjhtest.click

# Deploy the game Pods, Service, and Ingress

cat ~/pkos/3/ingress2.yaml | yh

WEBDOMAIN=$WEBDOMAIN envsubst < ~/pkos/3/ingress2.yaml | kubectl apply -f -

# Verify

kubectl get ingress,svc,ep,pod -n game-2048

# Confirm that the Route 53 record was created

dig +short $WEBDOMAIN

dig +short $WEBDOMAIN @8.8.8.8
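ExternalDNS syncs records periodically, so dig +short can return nothing for a while after the Ingress is created. A small retry helper avoids rerunning dig by hand. It polls until the record resolves. The names wait_until and has_record are hypothetical, and the attempt count and interval in the usage line are arbitrary.

```bash
# wait_until TRIES PAUSE CMD [ARGS...]
# Runs CMD up to TRIES times, sleeping PAUSE seconds between attempts.
# Returns 0 on the first success, 1 if every attempt fails.
wait_until() {
  tries=$1
  pause=$2
  shift 2
  attempt=1
  while [ "$attempt" -le "$tries" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$attempt" -lt "$tries" ]; then
      sleep "$pause"
    fi
    attempt=$((attempt + 1))
  done
  return 1
}

# Succeeds once the public resolver returns at least one answer for $1.
has_record() {
  [ -n "$(dig +short "$1" @8.8.8.8)" ]
}
```

Usage: `wait_until 30 10 has_record "$WEBDOMAIN" && dig +short "$WEBDOMAIN"` waits up to about five minutes for the record.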

# Check the ExternalDNS logs

kubectl logs -n kube-system -f $(kubectl get po -n kube-system | grep -Eo 'external-dns[A-Za-z0-9-]+')

# Delete

kubectl delete ingress ingress-2048 -n game-2048

kubectl delete svc service-2048 -n game-2048 && kubectl delete deploy deployment-2048 -n game-2048 && kubectl delete ns game-2048

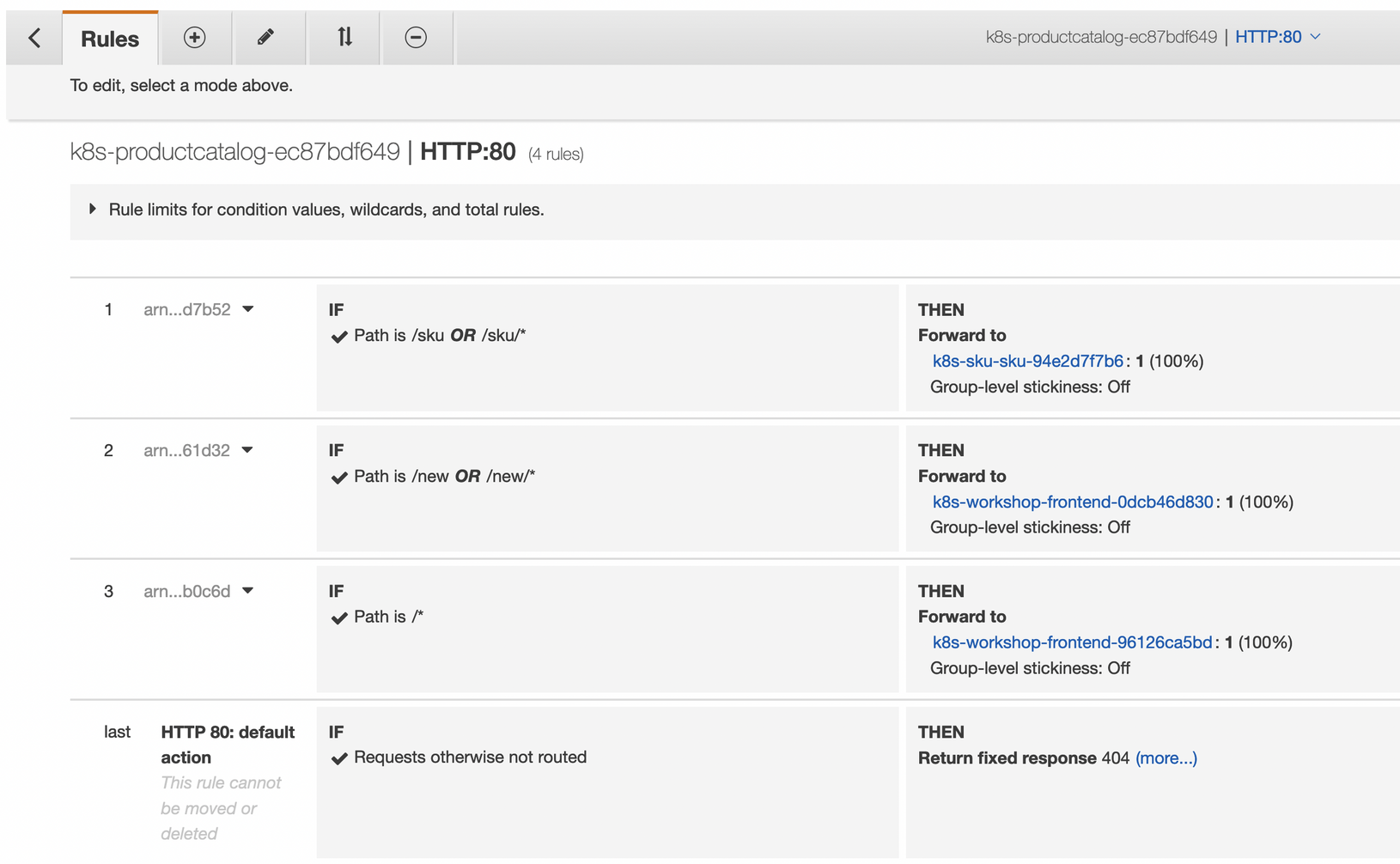

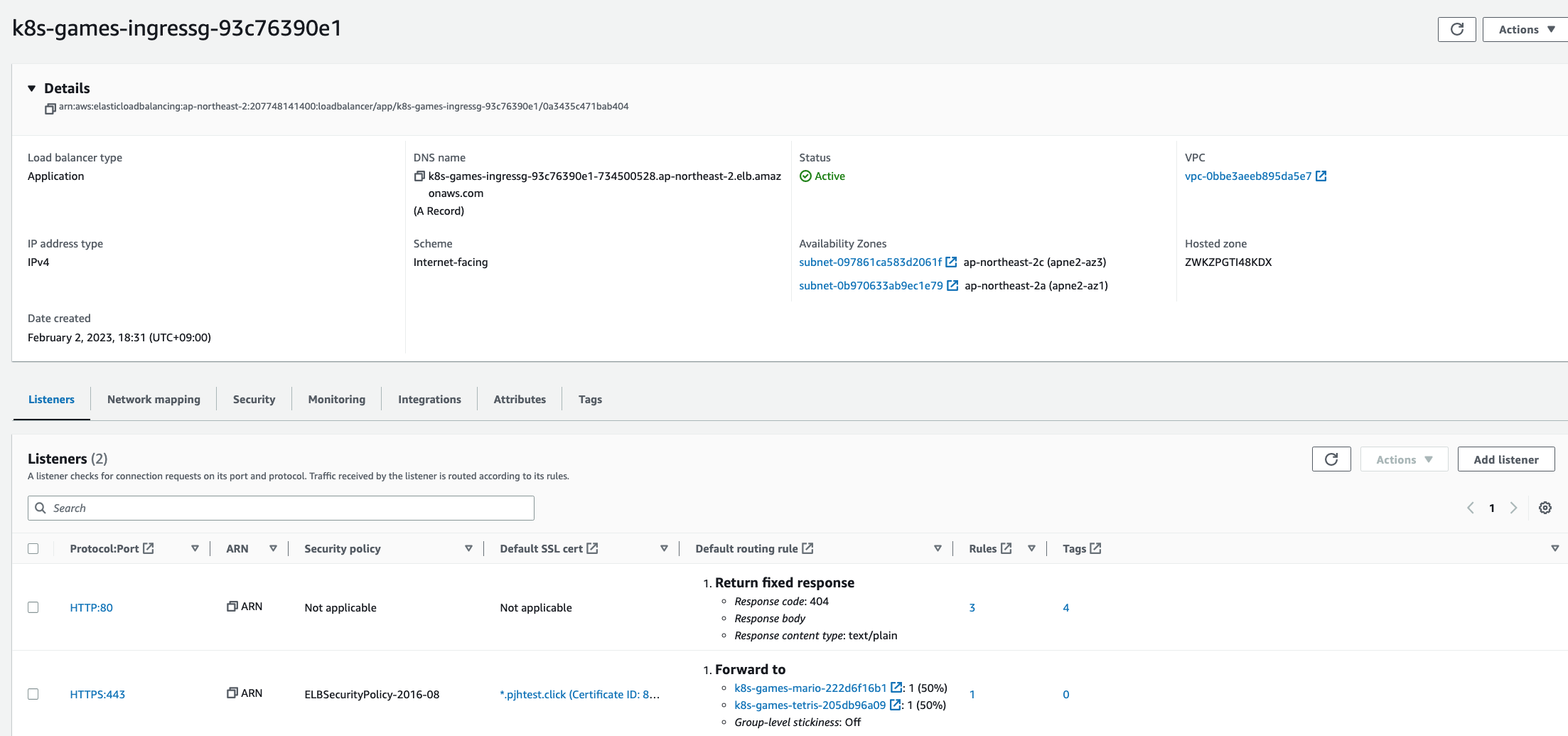

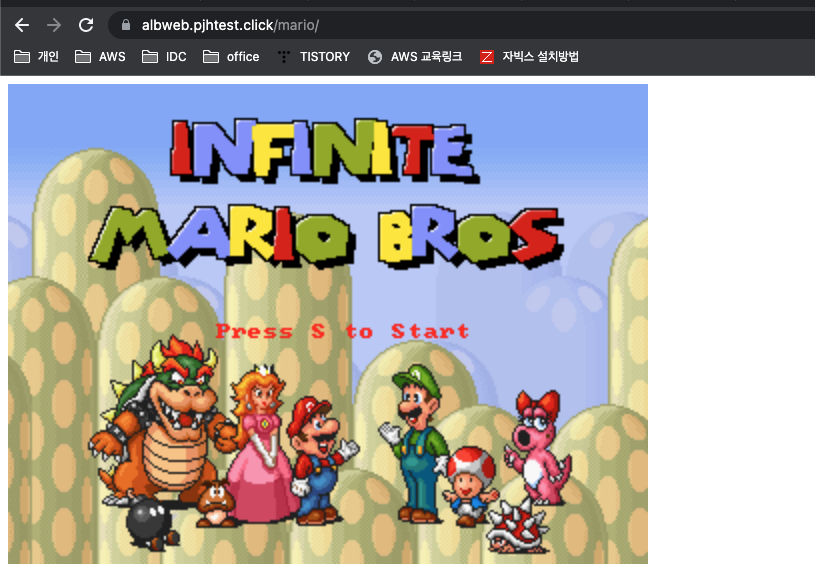

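Assignment 1 ← so hard I nearly wanted to give up...

Goal: configure an Ingress (with a domain, using a single ALB) so that the path /mario leads to the Mario game and /tetris leads to the Tetris game, apply SSL, and post the related screenshots

- In particular, look closely at the ALB listener rules and work out what each one means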

How To Expose Multiple Applications on Amazon EKS Using a Single Application Load Balancer | Amazon Web Services


https://catalog.workshops.aws/eks-immersionday/en-US/services-and-ingress/multi-ingress


# Create the environment

cd ~/pkos/3/

cp ingress2.yaml ingressgames.yaml

vi ingressgames.yaml   # edit the file so it contains the following

apiVersion: v1
kind: Namespace
metadata:
  name: games
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mario
  namespace: games
  labels:
    app: mario
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mario
  template:
    metadata:
      labels:
        app: mario
    spec:
      containers:
        - name: mario
          image: pengbai/docker-supermario
---
apiVersion: v1
kind: Service
metadata:
  name: mario
  namespace: games
  labels:
    app: mario
  annotations:
    alb.ingress.kubernetes.io/healthcheck-path: /mario/index.html
spec:
  selector:
    app: mario
  ports:
    - port: 80
      protocol: TCP
      targetPort: 8080
  type: NodePort
  externalTrafficPolicy: Local
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tetris
  namespace: games
  labels:
    app: tetris
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tetris
  template:
    metadata:
      labels:
        app: tetris
    spec:
      containers:
        - name: tetris
          image: bsord/tetris
---
apiVersion: v1
kind: Service
metadata:
  name: tetris
  namespace: games
  annotations:
    alb.ingress.kubernetes.io/healthcheck-path: /tetris/index.html
spec:
  selector:
    app: tetris
  ports:
    - port: 80
      protocol: TCP
      targetPort: 80
  type: NodePort
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: games
  name: ingress-games
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
    alb.ingress.kubernetes.io/healthcheck-port: traffic-port
    alb.ingress.kubernetes.io/healthcheck-interval-seconds: '15'
    alb.ingress.kubernetes.io/healthcheck-timeout-seconds: '5'
    alb.ingress.kubernetes.io/success-codes: '200'
    alb.ingress.kubernetes.io/healthy-threshold-count: '2'
    alb.ingress.kubernetes.io/unhealthy-threshold-count: '2'
spec:
  ingressClassName: alb
  rules:
    - host: ${WEBDOMAIN}
      http:
        paths:
          - path: /mario
            pathType: Prefix
            backend:
              service:
                name: mario
                port:
                  number: 80
          - path: /tetris
            pathType: Prefix
            backend:
              service:
                name: tetris
                port:
                  number: 80

# Set a variable: your own fully qualified domain

WEBDOMAIN=<your web server domain>

WEBDOMAIN=albweb.pjhtest.click

# Deploy the game Pods, Services, and Ingress

WEBDOMAIN=$WEBDOMAIN envsubst < ~/pkos/3/ingressgames.yaml | kubectl apply -f -
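For pathType: Prefix, Kubernetes matches element by element on /-separated path segments. So /mario matches /mario, /mario/ and /mario/index.html, but not /marios. As far as I know, the AWS Load Balancer Controller turns such a rule into the ALB path conditions /mario and /mario/*. The ALB forwards the original path unchanged, which is why the game files have to live under /mario inside the container. The function below is a simplified model of the Kubernetes matching rule, not the ALB's actual evaluation logic. prefix_match is a made-up name, and the function ignores corner cases such as repeated slashes.

```bash
# prefix_match RULE_PATH REQUEST_PATH
# Simplified model of Kubernetes Ingress pathType: Prefix matching.
prefix_match() {
  rule=${1%/}                  # "/mario/" and "/mario" behave the same; "/" becomes ""
  req=$2
  if [ -z "$rule" ]; then
    return 0                   # a rule of "/" matches every request
  fi
  case "$req" in
    "$rule"|"$rule"/*) return 0 ;;   # the same segment, or more segments below it
    *) return 1 ;;
  esac
}

for p in /mario /mario/ /mario/index.html /marios /tetris/index.html; do
  if prefix_match /mario "$p"; then echo "$p -> mario"; else echo "$p -> no match"; fi
done
```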

# Move each app's files into a subdirectory named after its Ingress path: the ALB forwards the request path unchanged, so /mario/... reaches the container as /mario/...
# Note: this only changes the running containers; the move is lost if a Pod is recreated

kubectl exec -n games deploy/mario -- sh -c 'cd /usr/local/tomcat/webapps/ROOT && mkdir -p mario && for f in *; do [ "$f" = mario ] || mv "$f" mario/; done'

kubectl exec -n games deploy/tetris -- sh -c 'cd /usr/share/nginx/html && mkdir -p tetris && for f in *; do [ "$f" = tetris ] || mv "$f" tetris/; done'

# Verify

kubectl get ingress,svc,ep,pod -n games

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/ingress-games alb albweb.pjhtest.click k8s-games-ingressg-93c76390e1-734500528.ap-northeast-2.elb.amazonaws.com 80 41m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mario NodePort 100.66.174.210 <none> 80:31538/TCP 41m

service/tetris NodePort 100.67.213.202 <none> 80:31901/TCP 41m

NAME ENDPOINTS AGE

endpoints/mario 172.30.34.123:8080 41m

endpoints/tetris 172.30.64.6:80 41m

NAME READY STATUS RESTARTS AGE

pod/mario-687bcfc9cc-cnm6g 1/1 Running 0 41m

pod/tetris-7f86b95884-txjz9 1/1 Running 0 41m

# Delete

kubectl delete ingress ingress-games -n games

kubectl delete svc mario tetris -n games && kubectl delete ns games

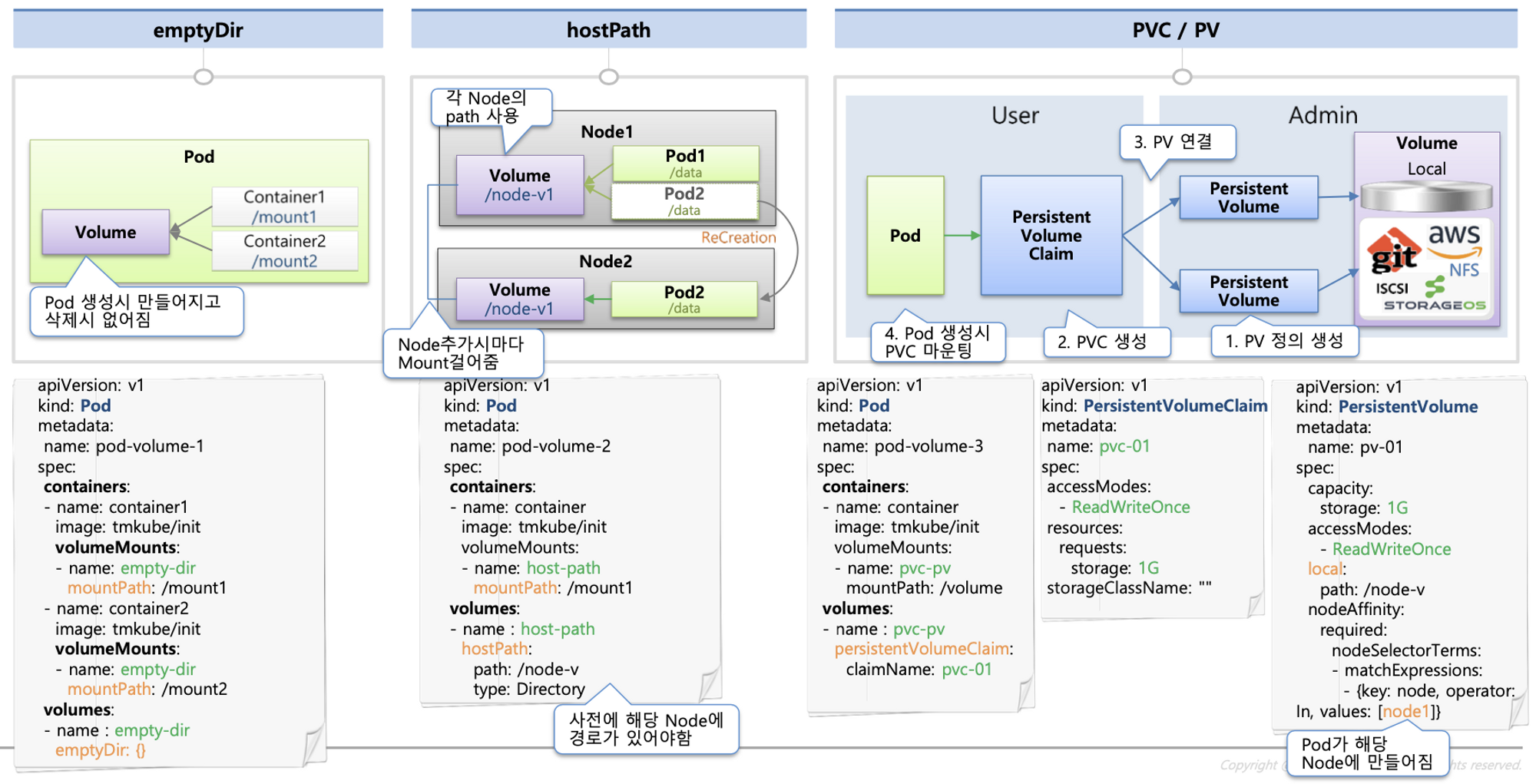

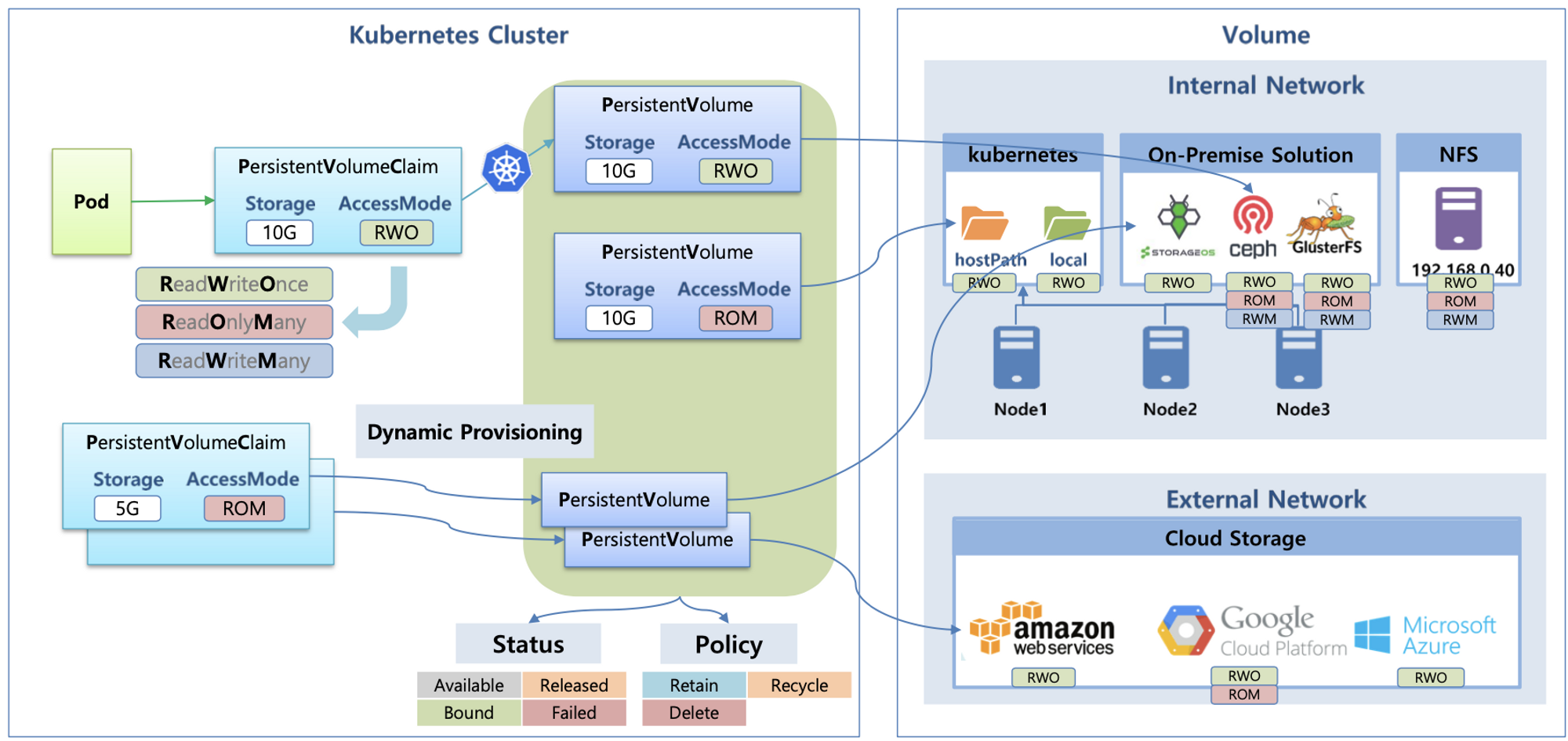

Kubernetes Storage

- Data inside a Pod is deleted when the Pod stops → in other words, every Pod so far has been a stateless application!

- Applications that must preserve data, such as a database Pod, are stateful applications → local volumes (hostPath) ⇒ Persistent Volumes (PV), which can be attached from any node, e.g. NFS, AWS EBS, Ceph

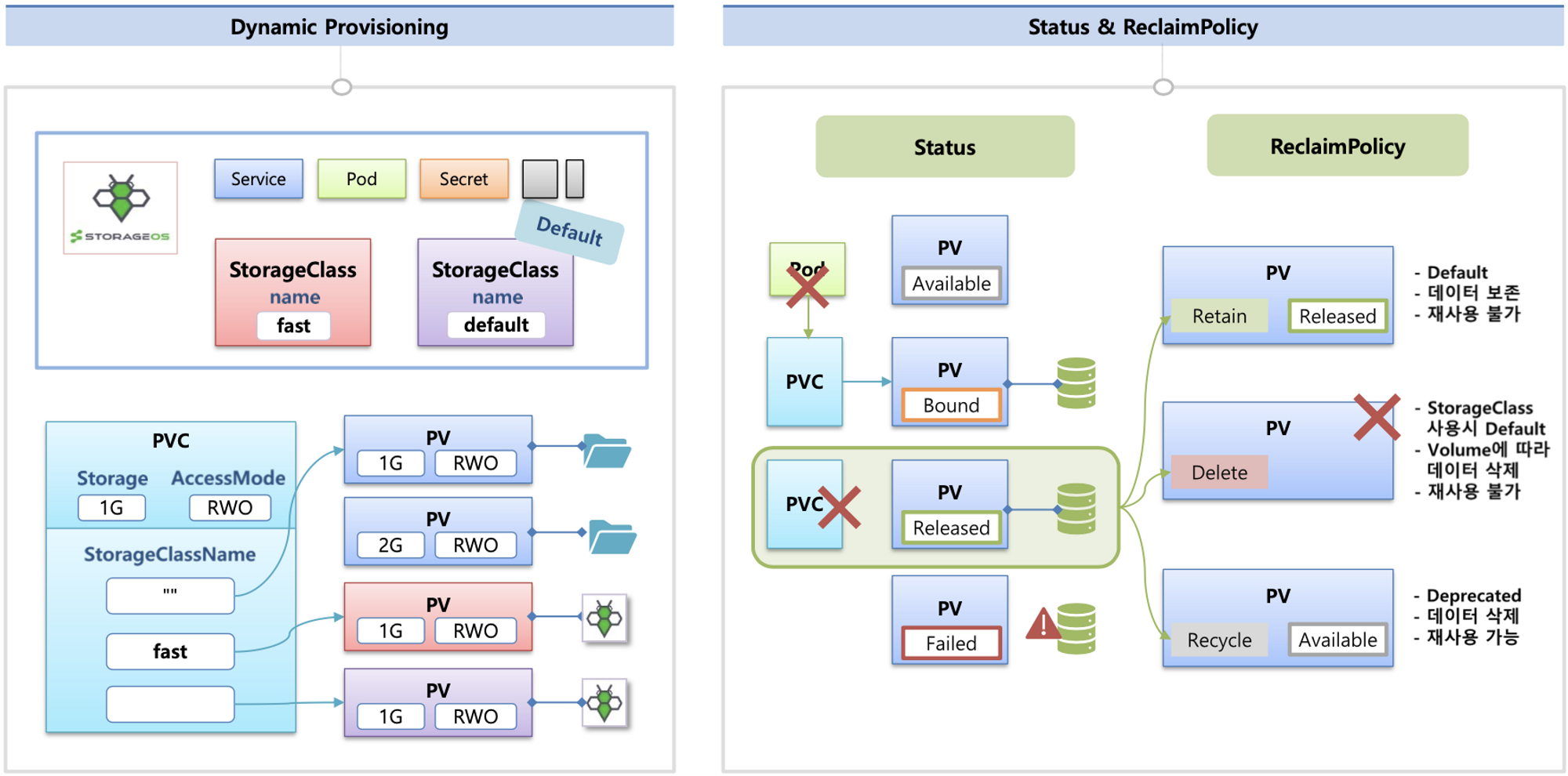

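- Dynamic provisioning: when a PVC is created, the StorageClass's provisioner automatically creates a matching PV (for example, a new EBS volume). That PV is then attached and mounted into the Pod that uses the claim.

- You can configure separately what happens to a PersistentVolume after it is released (that is, after its PVC is deleted). Kubernetes calls this the Reclaim Policy.

- The reclaim policies are Retain (keep the volume), Delete (delete it, which also deletes the backing EBS volume), and Recycle (scrub the data for reuse; deprecated).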

Storage overview: source - (🧝🏻‍♂️) Kim Tae-min's tech blog - https://kubetm.github.io/k8s/03-beginner-basic-resource/volume/

Volumes: emptyDir, hostPath, PV/PVC

Volume backends: built into K8s (hostPath, local), on-premises solutions (Ceph, etc.), NFS, cloud storage (AWS EBS, etc.)

Dynamic provisioning & volume status, ReclaimPolicy

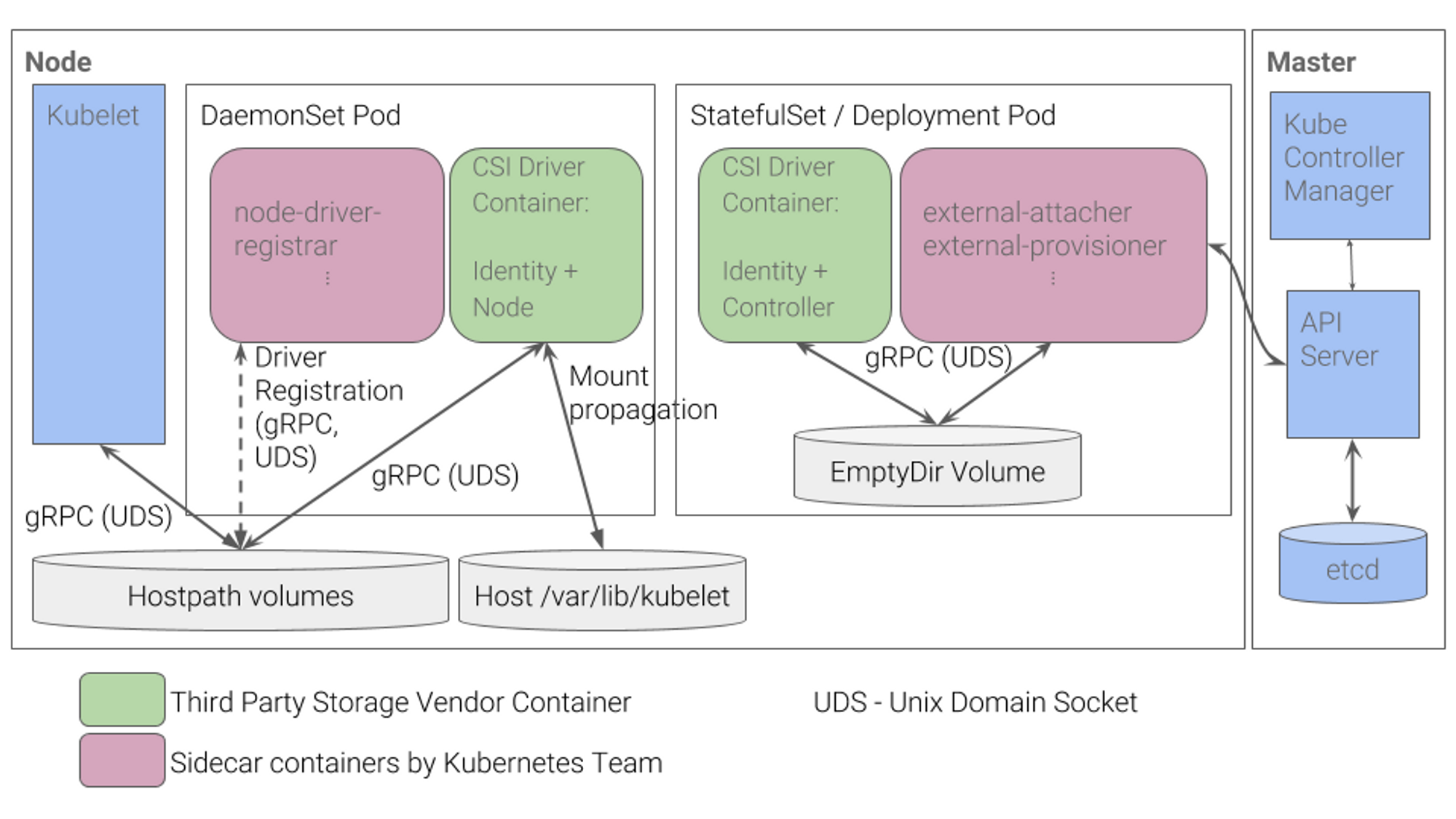
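You can change the reclaim policy of an existing PV with kubectl patch. This is useful when a dynamically provisioned volume holds data you want to keep after its PVC is gone, because the StorageClasses in this lab default to Delete. The helper reclaim_patch below is a hypothetical name. It only builds the patch body, and it refuses anything other than Retain and Delete. Recycle is deprecated, so this sketch leaves it out on purpose.

```bash
# reclaim_patch POLICY: print the kubectl patch body that sets spec.persistentVolumeReclaimPolicy.
reclaim_patch() {
  case "$1" in
    Retain|Delete)
      printf '{"spec":{"persistentVolumeReclaimPolicy":"%s"}}\n' "$1"
      ;;
    *)
      echo "unsupported reclaim policy: $1" >&2
      return 1
      ;;
  esac
}

reclaim_patch Retain
```

Usage: `kubectl patch pv <pv-name> -p "$(reclaim_patch Retain)"`. Afterwards, the RECLAIM POLICY column of `kubectl get pv` should show Retain.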

(Optional) Introduction to CSI (Container Storage Interface)

- Why CSI drivers exist: the AWS EBS provisioner built into the Kubernetes source code shipped on the Kubernetes release lifecycle, so using a new provisioner feature meant upgrading Kubernetes itself. Kubernetes developers therefore moved the built-in (in-tree) provisioners out of Kubernetes and made dynamic provisioning available through separate controller Pods. That is the CSI (Container Storage Interface) driver.

- With CSI, Kubernetes can use many storage providers through one common CSI interface.

- This is the structure of a typical CSI driver, and the AWS EBS CSI driver follows it. The controller Pod on the right, deployed as a StatefulSet or Deployment, calls the AWS API to create the actual EBS volume and attach it to the Kubernetes node (EC2 instance). The node Pod on the left, deployed as a DaemonSet on every node, then formats and mounts the attached volume so the Pod can use it.

Using the container's default ephemeral disk: see p. 153 of the book

# Deploy the Pod

# Append the current time (output of date) to /home/pod-out.txt every 10 seconds

cat ~/pkos/3/date-busybox-pod.yaml | yh

apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  terminationGracePeriodSeconds: 3
  containers:
    - name: busybox
      image: busybox
      command:
        - "/bin/sh"
        - "-c"
        - "while true; do date >> /home/pod-out.txt; cd /home; sync; sync; sleep 10; done"

kubectl apply -f ~/pkos/3/date-busybox-pod.yaml

# Check the file

kubectl get pod

kubectl exec busybox -- tail -f /home/pod-out.txt

Fri Feb 3 04:01:45 UTC 2023

Fri Feb 3 04:01:55 UTC 2023

...

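# Delete the Pod, recreate it, and check the file > are the earlier entries still there? (No: /home is in the container's own filesystem, which is discarded along with the Pod.)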

kubectl delete pod busybox

kubectl apply -f ~/pkos/3/date-busybox-pod.yaml

kubectl exec busybox -- tail -f /home/pod-out.txt

# Delete the Pod after the exercise

kubectl delete pod busybox

PV/PVC using a host path: deploy the local-path-provisioner StorageClass

# Note down the master node's name

kubectl get node | grep control-plane | awk '{print $1}'

i-0b1be5db780dedb96

# Deploy: edit the file yourself in vim first

curl -s -O https://raw.githubusercontent.com/rancher/local-path-provisioner/v0.0.23/deploy/local-path-storage.yaml

vim local-path-storage.yaml

----------------------------

# Add or change these parts of the file: nodeSelector/tolerations (pin the provisioner to the master node) and the ConfigMap paths (store volume data under /data/local-path)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      nodeSelector:
        kubernetes.io/hostname: "<your master node name>"
      tolerations:
        - effect: NoSchedule
          key: node-role.kubernetes.io/control-plane
          operator: Exists
...
kind: ConfigMap
apiVersion: v1
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/data/local-path"]
        }
      ]
    }

----------------------------

# Deploy

kubectl apply -f local-path-storage.yaml

# Verify: it is deployed on the master node

kubectl get-all -n local-path-storage

kubectl get pod -n local-path-storage -owide

kubectl describe cm -n local-path-storage local-path-config

kubectl get sc local-path

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path rancher.io/local-path Delete WaitForFirstConsumer false 34s

Create a Pod that uses a PV/PVC

# Create the PVC

cat ~/pkos/3/localpath1.yaml | yh

kubectl apply -f ~/pkos/3/localpath1.yaml

# Check the PVC

kubectl get pvc

kubectl describe pvc

# Create the Pod

cat ~/pkos/3/localpath2.yaml | yh

kubectl apply -f ~/pkos/3/localpath2.yaml

# Check the Pod

kubectl get pod,pv,pvc

kubectl df-pv

kubectl exec -it app -- tail -f /data/out.txt

Fri Feb 3 06:11:33 UTC 2023

Fri Feb 3 06:11:38 UTC 2023

Fri Feb 3 06:11:43 UTC 2023

Fri Feb 3 06:11:48 UTC 2023

...

# Install tools on the worker nodes

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP sudo apt install -y tree jq sysstat

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP sudo apt install -y tree jq sysstat

# Confirm that out.txt exists under this path, but only on the worker node where the Pod is currently running

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP tree /data

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP tree /data

/data

└── local-path

└── pvc-872084a3-cd70-4e2e-aea9-0dafba6e2f6c_default_localpath-claim

└── out.txt

2 directories, 1 file

# Check out.txt directly on that worker node: the pvc-... directory name below differs per environment

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP tail -f /data/local-path/pvc-872084a3-cd70-4e2e-aea9-0dafba6e2f6c_default_localpath-claim/out.txt

Fri Feb 3 06:13:53 UTC 2023

Fri Feb 3 06:13:58 UTC 2023

Fri Feb 3 06:14:03 UTC 2023

Fri Feb 3 06:14:08 UTC 2023

Fri Feb 3 06:14:13 UTC 2023

Fri Feb 3 06:14:18 UTC 2023

Fri Feb 3 06:14:23 UTC 2023

Fri Feb 3 06:14:28 UTC 2023

Fri Feb 3 06:14:33 UTC 2023

Fri Feb 3 06:14:38 UTC 2023

...
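The directory name in the path above depends on the PV, namespace, and PVC. Judging from the tree output in this lab, local-path-provisioner names it <PV name>_<namespace>_<PVC name> under the configured base path. That is an observation from this run, not a documented contract. A hypothetical helper, local_path_dir, rebuilds the path so you don't have to copy the UUID by hand:

```bash
# local_path_dir BASE PV_NAME NAMESPACE PVC_NAME
# Assumes the <pv>_<namespace>_<pvc> naming observed in the tree output above.
local_path_dir() {
  printf '%s/%s_%s_%s\n' "${1%/}" "$2" "$3" "$4"
}

local_path_dir /data/local-path pvc-872084a3-cd70-4e2e-aea9-0dafba6e2f6c default localpath-claim

# Usage against the cluster (the PVC's bound PV name is in .spec.volumeName):
#   PV=$(kubectl get pvc localpath-claim -o jsonpath='{.spec.volumeName}')
#   ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP tail -f "$(local_path_dir /data/local-path "$PV" default localpath-claim)/out.txt"
```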

Delete and recreate the Pod to check that the data is kept

# Delete the Pod, then check the PV/PVC

kubectl delete pod app

kubectl get pod,pv,pvc

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP tree /data

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP tree /data

/data

└── local-path

└── pvc-872084a3-cd70-4e2e-aea9-0dafba6e2f6c_default_localpath-claim

└── out.txt

2 directories, 1 file

# Run the Pod again

kubectl apply -f ~/pkos/3/localpath2.yaml

# Verify

kubectl exec -it app -- head /data/out.txt

Fri Feb 3 06:11:33 UTC 2023

Fri Feb 3 06:11:38 UTC 2023

Fri Feb 3 06:11:43 UTC 2023

Fri Feb 3 06:11:48 UTC 2023

Fri Feb 3 06:11:53 UTC 2023

Fri Feb 3 06:11:58 UTC 2023

Fri Feb 3 06:12:03 UTC 2023

Fri Feb 3 06:12:08 UTC 2023

Fri Feb 3 06:12:13 UTC 2023

Fri Feb 3 06:12:18 UTC 2023

kubectl exec -it app -- tail -f /data/out.txt

Fri Feb 3 06:16:03 UTC 2023

Fri Feb 3 06:16:08 UTC 2023

Fri Feb 3 06:16:13 UTC 2023

Fri Feb 3 06:16:18 UTC 2023

Fri Feb 3 06:16:23 UTC 2023

Fri Feb 3 06:16:28 UTC 2023

Fri Feb 3 06:16:33 UTC 2023

Fri Feb 3 06:16:38 UTC 2023

Fri Feb 3 06:17:27 UTC 2023

Fri Feb 3 06:17:32 UTC 2023

Delete the Pod and PVC before the next exercise

# Delete the Pod and PVC

kubectl delete pod app

kubectl get pv,pvc

kubectl delete pvc localpath-claim

# Verify

kubectl get pv

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP tree /data

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP tree /data

/data

└── local-path

1 directory, 0 files

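Exposing the weakness of hostPath-style storage: forcibly evict the Deployment's Pod and see what breaks → what problem occurs? [see p. 171 of the book]

- Is there a way (an idea) to solve this in the current setup? Storage replication vs. application replication, or some other idea? If the application (Pods) replicates data among its instances, what do you think about using local storage?

hostPath: the name comes from using a path on the node (host) where the Pod runs.

Unlike emptyDir, the volume's data does not disappear when the Pod dies.

Drain the worker node running the Pod to reproduce the problem → then restore it

# Monitoring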

watch kubectl get pod,pv,pvc -owide

# Deployment

cat ~/pkos/3/localpath-fail.yaml | yh

kubectl apply -f ~/pkos/3/localpath-fail.yaml

# Verify the deployment

kubectl exec deploy/date-pod -- cat /data/out.txt

Fri Feb 3 06:50:21 UTC 2023

# Store the name of the worker node running the Pod in a variable

PODNODE=$(kubectl get pod -l app=date -o jsonpath={.items[0].spec.nodeName})

echo $PODNODE

i-0af578857c81d79f3

# Drain that worker node, as you would for maintenance

kubectl drain $PODNODE --force --ignore-daemonsets --delete-emptydir-data && kubectl get pod -w

# Check the status: the replacement Pod cannot be scheduled elsewhere and stays Pending

kubectl get node

kubectl get deploy/date-pod

kubectl describe pod -l app=date | grep Events: -A5

# Check the Node Affinity on the PV created by the local-path StorageClass

kubectl describe pv

...

Node Affinity:

Required Terms:

Term 0: kubernetes.io/hostname in [i-0af578857c81d79f3]

...
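This Node Affinity is why the Pod cannot move. The local-path PV is pinned to the node that holds its directory, so while that node is drained the scheduler has nowhere to place the Pod. One way to compare the pinned node with $PODNODE in a script is to pull it out of the describe output. pv_affinity_nodes is a hypothetical helper, and it assumes the `kubernetes.io/hostname in [...]` line format shown above.

```bash
# pv_affinity_nodes: read `kubectl describe pv` output on stdin and print the
# bracketed hostname list from "kubernetes.io/hostname in [...]" lines.
pv_affinity_nodes() {
  sed -n 's|.*kubernetes\.io/hostname *in *\[\([^]]*\)].*|\1|p'
}

# Usage (needs a live cluster):
#   PINNED=$(kubectl describe pv | pv_affinity_nodes)
#   [ "$PINNED" = "$PODNODE" ] && echo "PV is pinned to the drained node $PODNODE"
# A structured alternative without text parsing:
#   kubectl get pv -o jsonpath='{.items[*].spec.nodeAffinity.required.nodeSelectorTerms[*].matchExpressions[*].values[*]}'
```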

# After maintenance, uncordon the worker node to return it to normal (failback)

kubectl uncordon $PODNODE && kubectl get pod -w

kubectl exec deploy/date-pod -- cat /data/out.txt

Fri Feb 3 06:50:21 UTC 2023

Fri Feb 3 06:50:26 UTC 2023

Fri Feb 3 06:50:31 UTC 2023

Fri Feb 3 06:50:36 UTC 2023

Fri Feb 3 06:50:41 UTC 2023

Fri Feb 3 06:50:46 UTC 2023

Fri Feb 3 06:50:51 UTC 2023

Fri Feb 3 06:50:56 UTC 2023

Fri Feb 3 06:51:01 UTC 2023

Fri Feb 3 06:51:06 UTC 2023

Fri Feb 3 06:51:11 UTC 2023

Fri Feb 3 06:53:04 UTC 2023

Fri Feb 3 06:53:09 UTC 2023

Fri Feb 3 06:53:14 UTC 2023

Delete the Pod and PVC before the next exercise

# Delete the Pod and PVC

kubectl delete deploy/date-pod

kubectl delete pvc localpath-claim

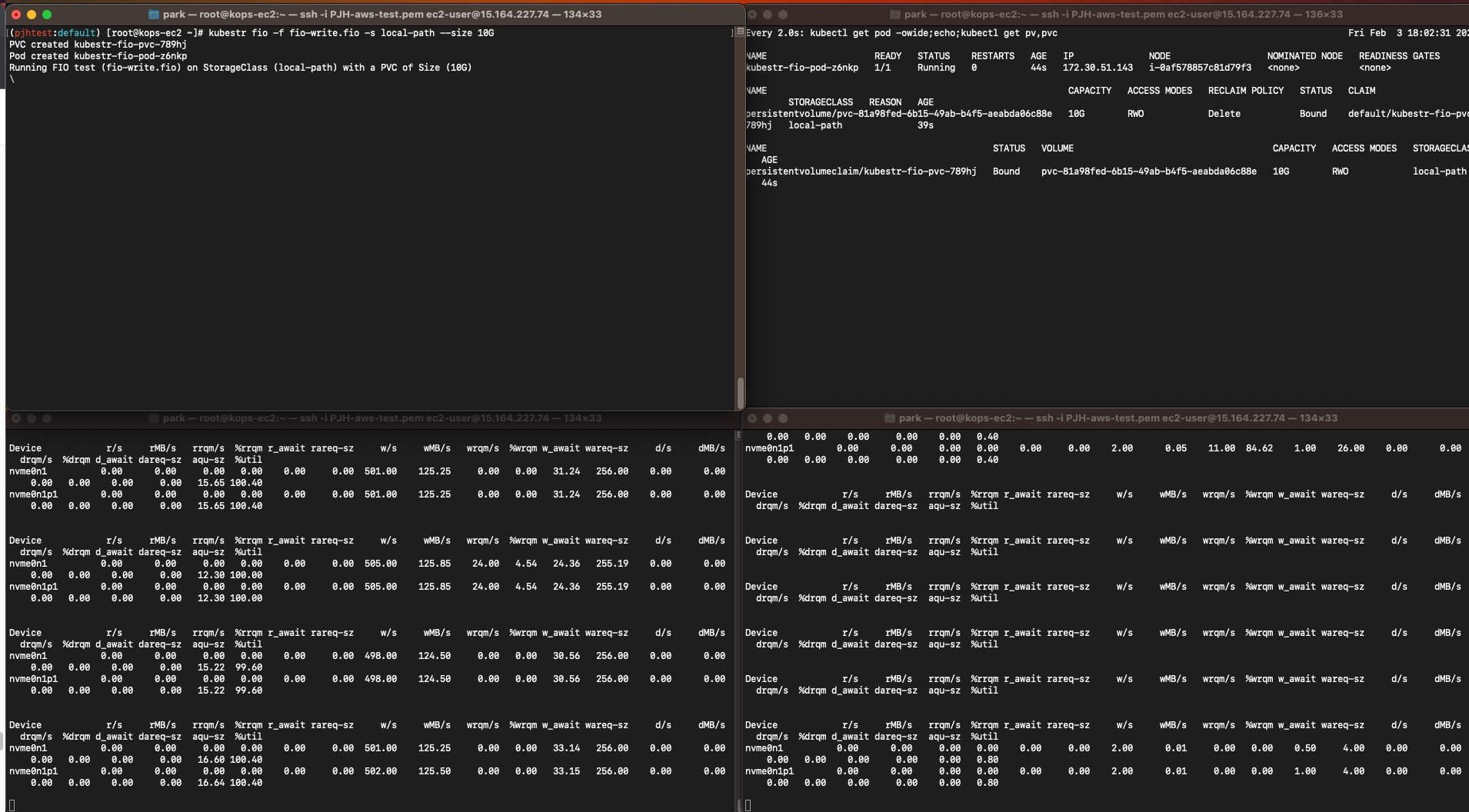

Monitoring and performance measurement with Kubestr & sar (NVMe SSD)

https://kubestr.io/

Korean-language write-up: https://flavono123.github.io/posts/kubestr-and-monitoring-tools/

Measuring performance with Kubestr (https://kubestr.io/) ⇒ compare IOPS across StorageClasses such as local-path and NFS

# Download the kubestr tool

wget https://github.com/kastenhq/kubestr/releases/download/v0.4.36/kubestr_0.4.36_Linux_amd64.tar.gz

tar xvfz kubestr_0.4.36_Linux_amd64.tar.gz && mv kubestr /usr/local/bin/ && chmod +x /usr/local/bin/kubestr

# Check the StorageClasses

kubestr -h

kubestr

# Monitoring

watch 'kubectl get pod -owide;echo;kubectl get pv,pvc'

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP iostat -xmdz 1 -p nvme0n1

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP iostat -xmdz 1 -p nvme0n1

--------------------------------------------------------------

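# rrqm/s : read requests merged per second while queued in the driver's request queue

# wrqm/s : write requests merged per second while queued in the driver's request queue

# r/s : read requests issued to the disk device per second

# w/s : write requests issued to the disk device per second

# rMB/s : megabytes read from the disk device per second

# wMB/s : megabytes written to the disk device per second

# await : the most important metric, the average response time; includes both the time spent waiting in the driver's request queue and the device's I/O service time (unit: ms)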

iostat -xmdz 1 -p xvdf

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

xvdf 0.00 0.00 2637.93 0.00 10.30 0.00 8.00 6.01 2.28 2.28 0.00 0.33 86.21

--------------------------------------------------------------
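When you watch iostat over SSH, it helps to pull out just the column you care about. The column set differs between sysstat versions. As far as I know, newer releases report r_await/w_await and drop the combined await and svctm columns. So this sketch looks a column up by its header name rather than by position. iostat_col is a hypothetical name.

```bash
# iostat_col NAME: read `iostat -x` output on stdin and print "<device> <value>"
# for the column whose header is NAME. Lines before a Device header are skipped.
iostat_col() {
  awk -v want="$1" '
    $1 ~ /^Device/ {                 # header line: locate the requested column
      col = 0
      for (i = 1; i <= NF; i++) if ($i == want) col = i
      next
    }
    col && NF >= col {               # device rows following a matching header
      print $1, $col
      fflush()                       # keep output flowing when fed by a live iostat
    }
  '
}

# Usage:
#   ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP iostat -xmdz 1 -p nvme0n1 | iostat_col w_await
```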

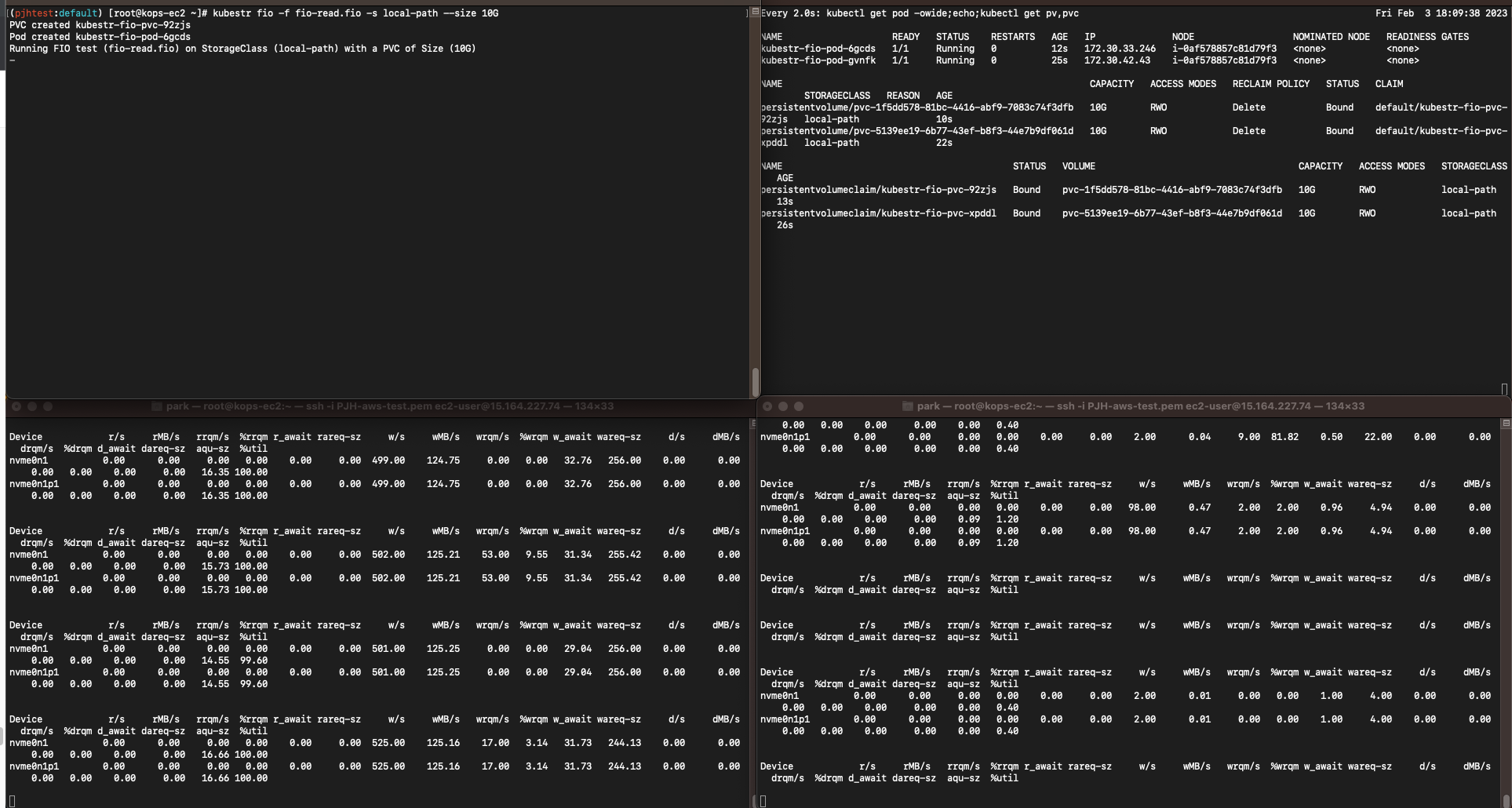

# Measure

curl -s -O https://raw.githubusercontent.com/wikibook/kubepractice/main/ch10/fio-read.fio

kubestr fio -f fio-read.fio -s local-path --size 10G

curl -s -O https://raw.githubusercontent.com/wikibook/kubepractice/main/ch10/fio-write.fio

kubestr fio -f fio-write.fio -s local-path --size 10G

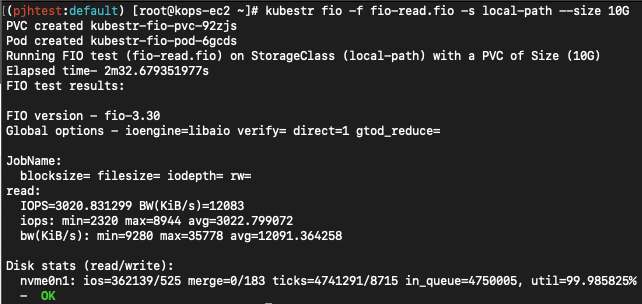

# Average read IOPS with a 4k block size: 3022

kubestr fio -f fio-read.fio -s local-path --size 10G

PVC created kubestr-fio-pvc-92zjs

Pod created kubestr-fio-pod-6gcds

Running FIO test (fio-read.fio) on StorageClass (local-path) with a PVC of Size (10G)

Elapsed time- 2m32.679351977s

FIO test results:

FIO version - fio-3.30

Global options - ioengine=libaio verify= direct=1 gtod_reduce=

JobName:

blocksize= filesize= iodepth= rw=

read:

IOPS=3020.831299 BW(KiB/s)=12083

iops: min=2320 max=8944 avg=3022.799072

bw(KiB/s): min=9280 max=35778 avg=12091.364258

Disk stats (read/write):

nvme0n1: ios=362139/525 merge=0/183 ticks=4741291/8715 in_queue=4750005, util=99.985825%

- OK
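The read numbers are consistent with each other. At a 4 KiB block size, bandwidth in KiB/s is IOPS × 4, and 3020.83 × 4 ≈ 12083, which is the reported BW. Note also that about 3,000 IOPS is the gp3 baseline, and the disk stats name nvme0n1 rather than the nvme1n1 instance store mounted at /data. So this run may well have measured the root EBS volume rather than the NVMe instance store. Here is a quick awk sketch of the conversion. iops_to_kibps is a hypothetical name, and the block size is passed in (4 KiB for these jobs):

```bash
# iops_to_kibps IOPS BLOCK_KIB: bandwidth in KiB/s, truncated to an integer.
iops_to_kibps() {
  awk -v iops="$1" -v bs="$2" 'BEGIN { printf "%d\n", int(iops * bs) }'
}

iops_to_kibps 3020.831299 4   # read job above
iops_to_kibps 3020.637207 4   # write job below
```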

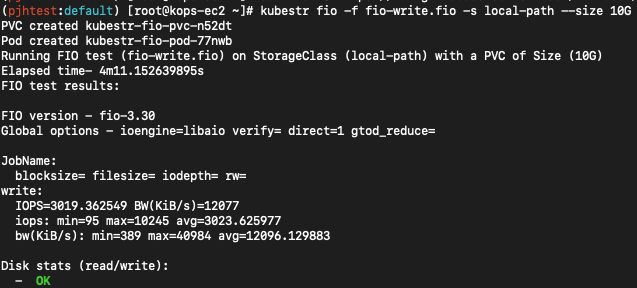

# Average write IOPS with a 4k block size: 3026

kubestr fio -f fio-write.fio -s local-path --size 10G

PVC created kubestr-fio-pvc-789hj

Pod created kubestr-fio-pod-z6nkp

Running FIO test (fio-write.fio) on StorageClass (local-path) with a PVC of Size (10G)

Elapsed time- 4m11.147954151s

FIO test results:

FIO version - fio-3.30

Global options - ioengine=libaio verify= direct=1 gtod_reduce=

JobName:

blocksize= filesize= iodepth= rw=

write:

IOPS=3020.637207 BW(KiB/s)=12082

iops: min=91 max=10459 avg=3026.595215

bw(KiB/s): min=367 max=41840 avg=12107.130859

Disk stats (read/write):

- OK

AWS EBS Controller

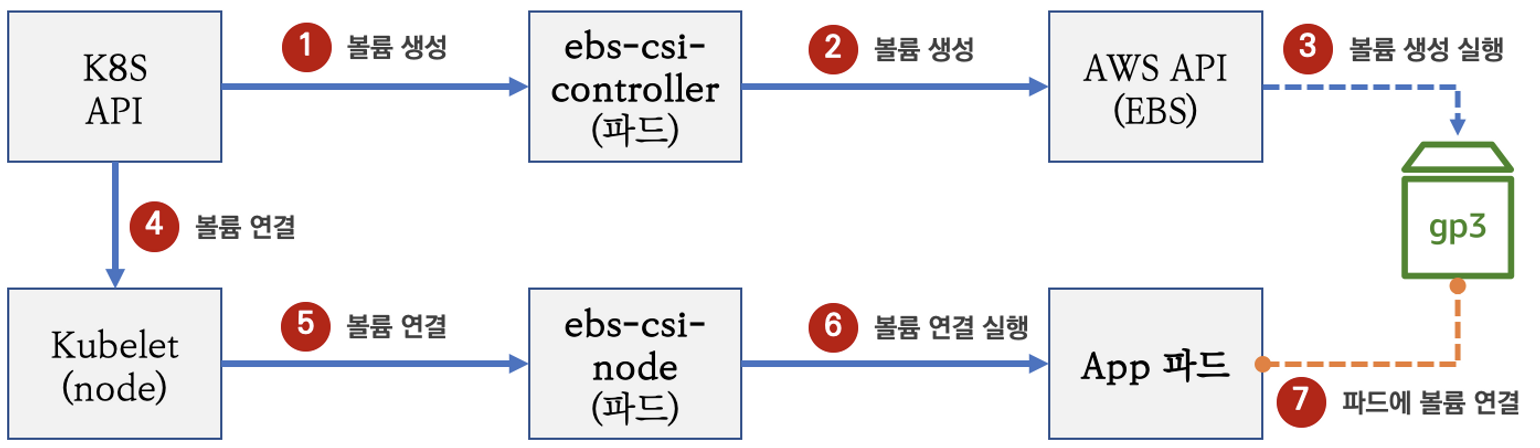

Volume (ebs-csi-controller): how the EBS CSI driver works: it creates volumes and attaches them for Pods

PV/PVC/Pod test

# Deployed by default when kOps is installed

kubectl get pod -n kube-system -l app.kubernetes.io/instance=aws-ebs-csi-driver

NAME READY STATUS RESTARTS AGE

ebs-csi-controller-654b79cdb6-fvnrb 5/5 Running 0 126m

ebs-csi-node-b7s9x 3/3 Running 0 125m

ebs-csi-node-qbrqb 3/3 Running 0 126m

ebs-csi-node-qhbjl 3/3 Running 0 125m

# Check the StorageClasses

kubectl get sc kops-csi-1-21 kops-ssd-1-17

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

kops-csi-1-21 (default) ebs.csi.aws.com Delete WaitForFirstConsumer true 127m

kops-ssd-1-17 kubernetes.io/aws-ebs Delete WaitForFirstConsumer true 127m

kubectl describe sc kops-csi-1-21 | grep Parameters

Parameters: encrypted=true,type=gp3

kubectl describe sc kops-ssd-1-17 | grep Parameters

Parameters: encrypted=true,type=gp2

# Check the worker nodes' EBS volumes: filter by tag (key/value)

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --output table

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[*].Attachments" | jq

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[*].Attachments[*].State" | jq

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[*].Attachments[*].State" --output text

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[*].Attachments[].State" --output text

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[*].Attachments[?State=='attached'].VolumeId[]" | jq

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[*].Attachments[?State=='attached'].VolumeId[]" --output text

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[*].Attachments[?State=='attached'].InstanceId[]" | jq

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[*].{ID:VolumeId,Tag:Tags}" | jq

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[].[VolumeId, VolumeType]" | jq

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[].[VolumeId, VolumeType, Attachments[].[InstanceId, State]]" | jq

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[].[VolumeId, VolumeType, Attachments[].[InstanceId, State][]][]" | jq

aws ec2 describe-volumes --filters Name=tag:k8s.io/role/node,Values=1 --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

# Check the EBS volumes added for Pods on the worker nodes

aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --output table

aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[*].{ID:VolumeId,Tag:Tags}" | jq

aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

# Monitor the EBS volumes added for Pods on the worker nodes

while true; do aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" --output text; date; sleep 1; done

# Create the PVC

cat ~/pkos/3/awsebs-pvc.yaml | yh

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi

kubectl apply -f ~/pkos/3/awsebs-pvc.yaml

# Create the Pod

cat ~/pkos/3/awsebs-pod.yaml | yh

apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  terminationGracePeriodSeconds: 3
  containers:
    - name: app
      image: centos
      command: ["/bin/sh"]
      args: ["-c", "while true; do echo $(date -u) >> /data/out.txt; sleep 5; done"]
      volumeMounts:
        - name: persistent-storage
          mountPath: /data
  volumes:
    - name: persistent-storage
      persistentVolumeClaim:
        claimName: ebs-claim

kubectl apply -f ~/pkos/3/awsebs-pod.yaml

# Check the PVC and Pod

kubectl get pvc,pv,pod

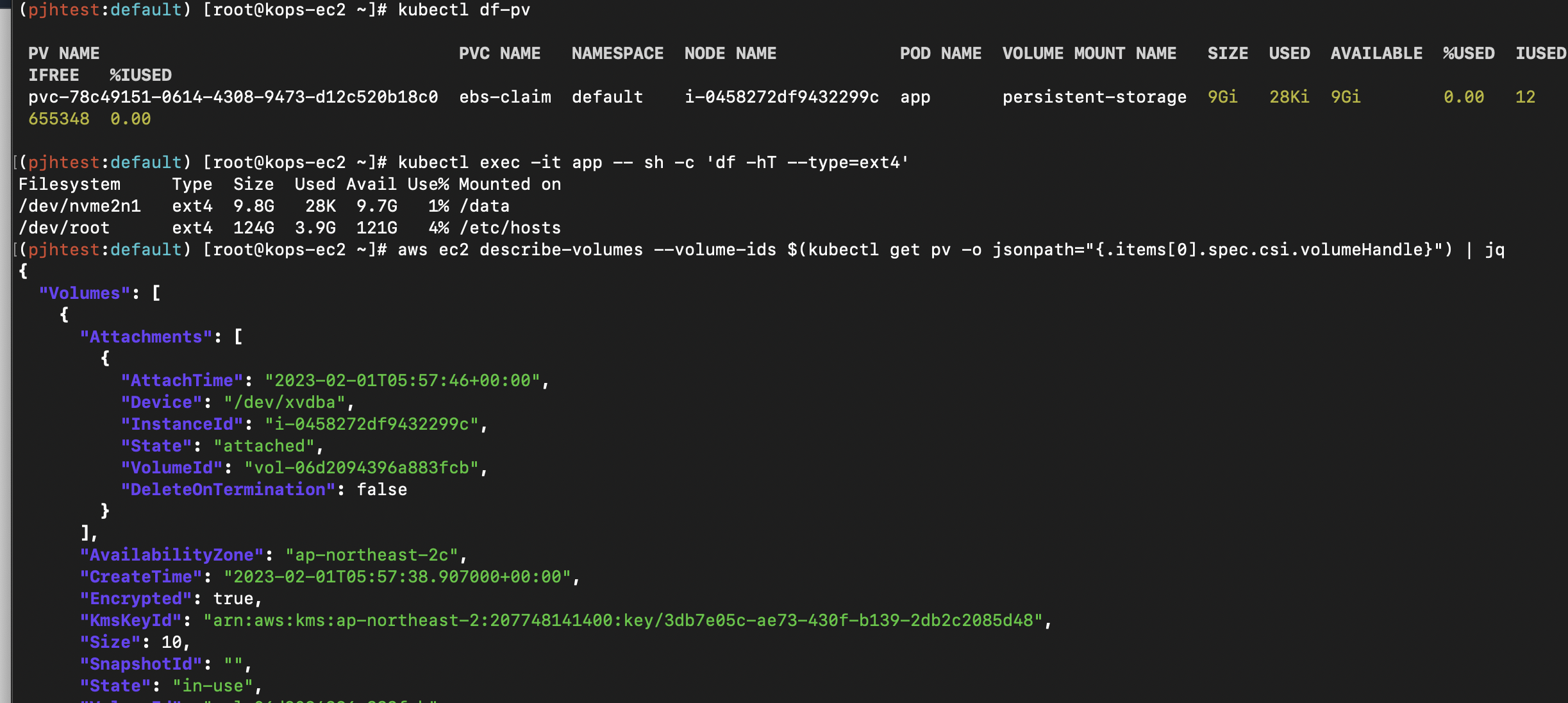

kubectl df-pv

# Show details of the newly added EBS volume

aws ec2 describe-volumes --volume-ids $(kubectl get pv -o jsonpath="{.items[0].spec.csi.volumeHandle}") | jq

# Confirm that new lines keep being appended to the file

kubectl exec app -- tail -f /data/out.txt

# Check the volume from inside the Pod

kubectl exec -it app -- sh -c 'df -hT --type=ext4'

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme2n1 ext4 3.8G 28K 3.8G 1% /data

/dev/root ext4 124G 3.9G 121G 4% /etc/hosts

Growing the volume ⇒ it can be expanded, but never shrunk!

# Grow the PVC from 4Gi to 10Gi by changing .spec.resources.requests.storage from 4Gi to 10Gi

kubectl get pvc ebs-claim -o jsonpath={.spec.resources.requests.storage} ; echo

kubectl get pvc ebs-claim -o jsonpath={.status.capacity.storage} ; echo
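A PVC can only grow, and only when its StorageClass has allowVolumeExpansion: true (as kops-csi-1-21 does). So it can help to check the requested size before patching. This is a sketch with hypothetical helper names. to_bytes handles only plain numbers with the suffixes listed in it, not every Kubernetes quantity form (for example, exponents or milli units).

```bash
# to_bytes QUANTITY: convert e.g. 4Gi or 500M to bytes; fails on unknown suffixes.
to_bytes() {
  awk -v q="$1" 'BEGIN {
    num = q;  sub(/[A-Za-z]+$/, "", num)     # numeric part
    unit = q; sub(/^[0-9.]+/, "", unit)      # suffix part
    m[""] = 1
    m["Ki"] = 1024; m["Mi"] = 1024 ^ 2; m["Gi"] = 1024 ^ 3; m["Ti"] = 1024 ^ 4
    m["k"] = 1000;  m["M"] = 1000 ^ 2;  m["G"] = 1000 ^ 3;  m["T"] = 1000 ^ 4
    if (num == "" || !(unit in m)) exit 1
    printf "%.0f\n", num * m[unit]
  }'
}

# check_grow CURRENT NEW: succeed only if NEW is strictly larger than CURRENT.
check_grow() {
  cur=$(to_bytes "$1") || return 1
  new=$(to_bytes "$2") || return 1
  [ "$new" -gt "$cur" ]
}

# Usage:
#   CUR=$(kubectl get pvc ebs-claim -o jsonpath='{.status.capacity.storage}')
#   check_grow "$CUR" 10Gi && kubectl patch pvc ebs-claim -p '{"spec":{"resources":{"requests":{"storage":"10Gi"}}}}'
```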

kubectl patch pvc ebs-claim -p '{"spec":{"resources":{"requests":{"storage":"10Gi"}}}}'

# Verify: the new size may take a little while to show up

kubectl exec -it app -- sh -c 'df -hT --type=ext4'

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme2n1 ext4 9.8G 28K 9.7G 1% /data

/dev/root ext4 124G 3.9G 121G 4% /etc/hosts

kubectl df-pv

PV NAME PVC NAME NAMESPACE NODE NAME POD NAME VOLUME MOUNT NAME SIZE USED AVAILABLE %USED IUSED IFREE %IUSED

pvc-78c49151-0614-4308-9473-d12c520b18c0 ebs-claim default i-0458272df9432299c app persistent-storage 9Gi 28Ki 9Gi 0.00 12 655348 0.00

aws ec2 describe-volumes --volume-ids $(kubectl get pv -o jsonpath="{.items[0].spec.csi.volumeHandle}") | jq

{

"Volumes": [

{

"Attachments": [

{

"AttachTime": "2023-02-01T05:57:46+00:00",

"Device": "/dev/xvdba",

"InstanceId": "i-0458272df9432299c",

"State": "attached",

"VolumeId": "vol-06d2094396a883fcb",

"DeleteOnTermination": false

}

],

"AvailabilityZone": "ap-northeast-2c",

"CreateTime": "2023-02-01T05:57:38.907000+00:00",

"Encrypted": true,

"KmsKeyId": "arn:aws:kms:ap-northeast-2:207748141400:key/3db7e05c-ae73-430f-b139-2db2c2085d48",

"Size": 10,

"SnapshotId": "",

"State": "in-use",

"VolumeId": "vol-06d2094396a883fcb",

"Iops": 3000,

"Tags": [

{

.........................

Assignment 3

Goal: use AWS EBS through a PVC, grow the volume online, and post the related screenshots

Done above!

Clean up

kubectl delete pod app && kubectl delete pvc ebs-claim

AWS Volume SnapShots Controller

Install the snapshot controller

# Edit the kOps cluster

kops edit cluster

-----

spec:
  snapshotController:
    enabled: true
  certManager:      # already installed
    enabled: true   # already installed

-----

# Apply the update

kops update cluster --yes && sleep 3 && kops rolling-update cluster

# Verify >> the deployment takes about 3 minutes

watch kubectl get pod -n kube-system

kubectl get crd | grep volumesnapshot

volumesnapshotclasses.snapshot.storage.k8s.io 2023-02-01T06:09:27Z

volumesnapshotcontents.snapshot.storage.k8s.io 2023-02-01T06:09:27Z

volumesnapshots.snapshot.storage.k8s.io 2023-02-01T06:09:27Z

# Create the VolumeSnapshotClass

kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-ebs-csi-driver/master/examples/kubernetes/snapshot/manifests/classes/snapshotclass.yaml

kubectl get volumesnapshotclass

NAME DRIVER DELETIONPOLICY AGE

csi-aws-vsc ebs.csi.aws.com Delete 5s

Create a test PVC/Pod

# Create the PVC

kubectl apply -f ~/pkos/3/awsebs-pvc.yaml

# Create the Pod

kubectl apply -f ~/pkos/3/awsebs-pod.yaml

# Create a VolumeSnapshot referencing the PersistentVolumeClaim name

cat ~/pkos/3/ebs-volume-snapshot.yaml | yh

apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ebs-volume-snapshot
spec:
  volumeSnapshotClassName: csi-aws-vsc
  source:
    persistentVolumeClaimName: ebs-claim

kubectl apply -f ~/pkos/3/ebs-volume-snapshot.yaml

# Confirm that new lines keep being appended to the file

kubectl exec app -- tail -f /data/out.txt

# Check the VolumeSnapshot (this takes a while)

kubectl get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

ebs-volume-snapshot true ebs-claim 4Gi csi-aws-vsc snapcontent-0968cecb-c325-4172-aa7d-c8ecb430a687 71s 72s

kubectl get volumesnapshot ebs-volume-snapshot -o jsonpath={.status.boundVolumeSnapshotContentName}

snapcontent-0968cecb-c325-4172-aa7d-c8ecb430a687

kubectl describe volumesnapshot.snapshot.storage.k8s.io ebs-volume-snapshot

# Check the AWS EBS snapshot

aws ec2 describe-snapshots --owner-ids self | jq

aws ec2 describe-snapshots --owner-ids self --query 'Snapshots[]' --output table

------------------------------------------------------------------------------------------------------------------

| DescribeSnapshots |

+---------------+------------------------------------------------------------------------------------------------+

| Description | Created by AWS EBS CSI driver for volume vol-0de1a757300f69eb8 |

| Encrypted | True |

| KmsKeyId | arn:aws:kms:ap-northeast-2:207748141400:key/3db7e05c-ae73-430f-b139-2db2c2085d48 |

| OwnerId | 207748141400 |

| Progress | 100% |

| SnapshotId | snap-0bd73fad54496691f |

| StartTime | 2023-02-01T07:54:23.202000+00:00 |

| State | completed |

| StorageTier | standard |

| VolumeId | vol-0de1a757300f69eb8 |

| VolumeSize | 4 |

+---------------+------------------------------------------------------------------------------------------------+

|| Tags ||

|+--------------------------------------+-----------------------------------------------------------------------+|

|| Key | Value ||

|+--------------------------------------+-----------------------------------------------------------------------+|

|| KubernetesCluster | pjhtest.click ||

|| kubernetes.io/cluster/pjhtest.click | owned ||

|| Name | pjhtest.click-dynamic-snapshot-0968cecb-c325-4172-aa7d-c8ecb430a687 ||

|| ebs.csi.aws.com/cluster | true ||

|| CSIVolumeSnapshotName | snapshot-0968cecb-c325-4172-aa7d-c8ecb430a687 ||

|+--------------------------------------+-----------------------------------------------------------------------+|

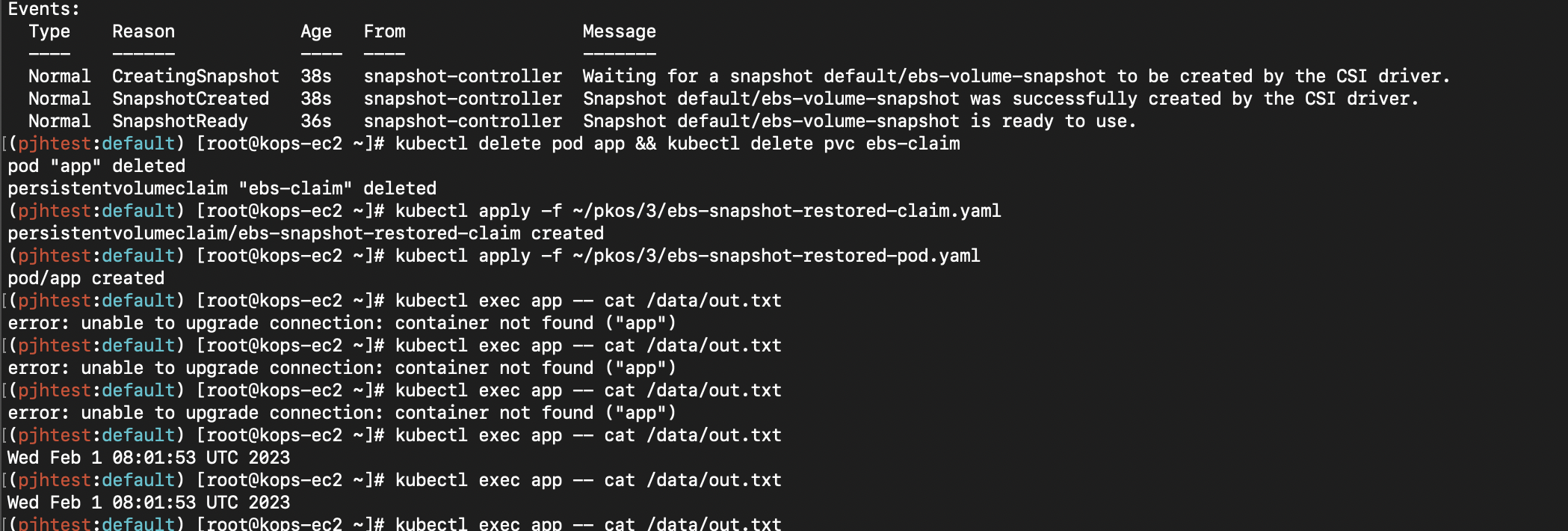

# Delete the app Pod and the PVC to simulate a failure

kubectl delete pod app && kubectl delete pvc ebs-claim

Restore from the snapshot

# Restore a PVC from the snapshot

cat ~/pkos/3/ebs-snapshot-restored-claim.yaml | yh

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-snapshot-restored-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
  dataSource:
    name: ebs-volume-snapshot
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io

kubectl apply -f ~/pkos/3/ebs-snapshot-restored-claim.yaml

# Verify: the PVC stays Pending until a Pod uses it, because the StorageClass uses WaitForFirstConsumer

kubectl get pvc,pv

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/ebs-snapshot-restored-claim Pending kops-csi-1-21 4s

# Create the Pod

cat ~/pkos/3/ebs-snapshot-restored-pod.yaml | yh


kubectl apply -f ~/pkos/3/ebs-snapshot-restored-pod.yaml

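# Check the file: the entries saved before the Pod was deleted (as captured by the snapshot) are still there, and new entries keep being written after the Pod is recreated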

kubectl exec app -- cat /data/out.txt

...

Wed Feb 1 08:01:53 UTC 2023

...

# Delete

kubectl delete pod app && kubectl delete pvc ebs-snapshot-restored-claim && kubectl delete volumesnapshots ebs-volume-snapshot

Assignment 4

Goal: complete the AWS Volume Snapshots lab and post the related screenshots

Done above!

(After the lab) Delete the resources

Delete the kOps cluster & the AWS CloudFormation stack

kops delete cluster --yes && aws cloudformation delete-stack --stack-name mykops
