Kaidos's Tech Blog

Terraform EKS - 02 (Network, Storage)


2025.02.10 - [EKS] - Terraform EKS - 01(VPC)


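Since I work on this whenever I have time, IP addresses may change from section to section.

To save on costs, I delete the environment after each session and recreate it when I pick the work back up.

Changes from the previous post: the network CIDR ranges changed and iam.tf was added.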

- VPC CIDR: 172.20.0.0/16

| Name | IPv4 CIDR | Availability Zone | Status |
|---|---|---|---|
| eks-public-01 | 172.20.10.0/20 | ap-northeast-2a | New |
| eks-public-02 | 172.20.30.0/20 | ap-northeast-2b | New |
| eks-public-03 | 172.20.50.0/20 | ap-northeast-2c | New |
| eks-public-04 | 172.20.70.0/20 | ap-northeast-2d | New |
| eks-private-01 | 172.20.110.0/20 | ap-northeast-2a | New |
| eks-private-02 | 172.20.130.0/20 | ap-northeast-2b | New |
| eks-private-03 | 172.20.150.0/20 | ap-northeast-2c | New |
| eks-private-04 | 172.20.170.0/20 | ap-northeast-2d | New |
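Strictly speaking, a /20 covers 16 consecutive values of the third octet, so its network address needs a third octet that is a multiple of 16 (0, 16, 32, ...). Entries such as 172.20.10.0/20 are therefore not network addresses. The Terraform AWS provider likely rejects such a value for aws_subnet, so the subnets built in part 01 most likely correspond to the aligned blocks; the node IPs seen later in this post (172.20.96.238, 172.20.146.18) fit 172.20.96.0/20 and 172.20.144.0/20. The pure-bash sketch below, an illustration rather than part of the original setup, clears the host bits of each table entry to show the aligned block.

```shell
#!/usr/bin/env bash
# Print the aligned network address of an IPv4 CIDR by clearing its host bits.
# Pure bash arithmetic (64-bit integers), no external tools needed.
cidr_network() {
  local ip=${1%/*} prefix=${1#*/}
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Netmask: "prefix" one-bits followed by (32 - prefix) zero-bits.
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local net=$(( n & mask ))
  echo "$(( (net >> 24) & 255 )).$(( (net >> 16) & 255 )).$(( (net >> 8) & 255 )).$(( net & 255 ))/$prefix"
}

# Check every subnet listed in the table above.
for cidr in 172.20.10.0/20 172.20.30.0/20 172.20.50.0/20 172.20.70.0/20 \
            172.20.110.0/20 172.20.130.0/20 172.20.150.0/20 172.20.170.0/20; do
  echo "$cidr -> $(cidr_network "$cidr")"
done
```

For the table values it prints, for example, 172.20.10.0/20 -> 172.20.0.0/20 and 172.20.110.0/20 -> 172.20.96.0/20.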

1. Deploy the Base Environment

- Deploy the base environment with CloudFormation

# Deploy the CloudFormation stack

aws cloudformation create-stack \

--stack-name my-basic-infra \

--template-body file://basic_infra.yaml

# [Monitoring] CloudFormation stack status

while true; do

date

AWS_PAGER="" aws cloudformation list-stacks \

--stack-status-filter CREATE_IN_PROGRESS CREATE_COMPLETE CREATE_FAILED DELETE_IN_PROGRESS DELETE_FAILED \

--query "StackSummaries[*].{StackName:StackName, StackStatus:StackStatus}" \

--output table

sleep 1

done

Wed Feb 12 15:40:07 KST 2025

---------------------------------------

| ListStacks |

+-----------------+-------------------+

| StackName | StackStatus |

+-----------------+-------------------+

| my-basic-infra | CREATE_COMPLETE |

+-----------------+-------------------+
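The monitoring loop above runs until it is interrupted with Ctrl+C. For scripting, the AWS CLI also provides `aws cloudformation wait stack-create-complete --stack-name my-basic-infra`. If a custom bounded poll is preferred, the sketch below is one way to write it; `wait_for_stack` is a hypothetical helper name, and it treats only CREATE_COMPLETE as success.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a status command repeatedly until it reports a
# terminal CloudFormation state, or give up after max_attempts polls.
# Prints the last status (or TIMEOUT); returns 0 = success, 1 = failed, 2 = timeout.
# Usage: wait_for_stack <max_attempts> <interval_sec> <command...>
wait_for_stack() {
  local max_attempts=$1 interval=$2
  shift 2
  local attempt status
  for (( attempt = 1; attempt <= max_attempts; attempt++ )); do
    status=$("$@")
    case "$status" in
      CREATE_COMPLETE)
        echo "$status"; return 0 ;;
      CREATE_FAILED | ROLLBACK_* | DELETE_*)
        echo "$status"; return 1 ;;
    esac
    sleep "$interval"
  done
  echo "TIMEOUT"
  return 2
}

# Example (needs AWS credentials):
# wait_for_stack 120 5 aws cloudformation describe-stacks \
#   --stack-name my-basic-infra --query 'Stacks[0].StackStatus' --output text
```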

- variable.tf

variable "KeyName" {

description = "Name of an existing EC2 KeyPair to enable SSH access to the instances."

type = string

}

variable "MyDomain" {

description = "Your Domain Name."

type = string

}

variable "MyIamUserAccessKeyID" {

description = "IAM User - AWS Access Key ID."

type = string

sensitive = true

}

variable "MyIamUserSecretAccessKey" {

description = "IAM User - AWS Secret Access Key."

type = string

sensitive = true

}

variable "SgIngressSshCidr" {

description = "The IP address range that can be used to SSH to the EC2 instances."

type = string

validation {

condition = can(regex("^(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})/(\\d{1,2})$", var.SgIngressSshCidr))

error_message = "The SgIngressSshCidr value must be a valid IP CIDR range of the form x.x.x.x/x."

}

}

variable "MyInstanceType" {

description = "EC2 instance type."

type = string

default = "t3.medium"

validation {

condition = contains(["t2.micro", "t2.small", "t2.medium", "t3.micro", "t3.small", "t3.medium"], var.MyInstanceType)

error_message = "Invalid instance type. Valid options are t2.micro, t2.small, t2.medium, t3.micro, t3.small, t3.medium."

}

}

variable "ClusterBaseName" {

description = "Base name of the cluster."

type = string

default = "pjh-dev-eks"

}

variable "KubernetesVersion" {

description = "Kubernetes version for the EKS cluster."

type = string

default = "1.30"

}

variable "WorkerNodeInstanceType" {

description = "EC2 instance type for the worker nodes."

type = string

default = "t3.medium"

}

variable "WorkerNodeCount" {

description = "Number of worker nodes."

type = number

default = 3

}

variable "WorkerNodeVolumesize" {

description = "Volume size for worker nodes (in GiB)."

type = number

default = 30

}

variable "TargetRegion" {

description = "AWS region where the resources will be created."

type = string

default = "ap-northeast-2"

}

variable "availability_zones" {

description = "List of availability zones."

type = list(string)

default = ["ap-northeast-2a", "ap-northeast-2b", "ap-northeast-2c", "ap-northeast-2d"]

}
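The validation regex on SgIngressSshCidr checks only the shape x.x.x.x/x, so a value such as 999.1.1.1/40 passes it and would likely only be rejected later by the AWS API. A stricter check can be run in the shell before exporting TF_VAR_SgIngressSshCidr; `is_valid_cidr` below is a hypothetical helper that also bounds each octet to 0-255 and the prefix to 0-32. On the Terraform side, a condition such as `can(cidrhost(var.SgIngressSshCidr, 0))` is probably a comparable option.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if $1 is an IPv4 CIDR whose octets are
# 0-255 and whose prefix length is 0-32, otherwise return 1.
is_valid_cidr() {
  local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})/([0-9]{1,2})$'
  [[ $1 =~ $re ]] || return 1
  local i
  for i in 1 2 3 4; do
    # 10# forces base 10 so values like "08" are not read as octal.
    (( 10#${BASH_REMATCH[i]} <= 255 )) || return 1
  done
  (( 10#${BASH_REMATCH[5]} <= 32 ))
}

# Example: validate the value before exporting it for Terraform.
# cidr="$(curl -s ipinfo.io/ip)/32"
# is_valid_cidr "$cidr" && export TF_VAR_SgIngressSshCidr="$cidr"
```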

- ec2.tf

data "aws_ssm_parameter" "ami" {

name = "/aws/service/canonical/ubuntu/server/22.04/stable/current/amd64/hvm/ebs-gp2/ami-id"

}

resource "aws_security_group" "eks_sec_group" {

vpc_id = data.aws_vpc.service_vpc.id

name = "${var.ClusterBaseName}-bastion-sg"

description = "Security group for ${var.ClusterBaseName} Host"

ingress {

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = [var.SgIngressSshCidr]

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

tags = {

Name = "${var.ClusterBaseName}-HOST-SG"

}

}

resource "aws_instance" "eks_bastion" {

ami = data.aws_ssm_parameter.ami.value

instance_type = var.MyInstanceType

key_name = var.KeyName

subnet_id = data.aws_subnet.eks_public_1.id

associate_public_ip_address = true

private_ip = "172.20.10.100"

vpc_security_group_ids = [aws_security_group.eks_sec_group.id]

tags = {

Name = "${var.ClusterBaseName}-bastion-EC2"

}

root_block_device {

volume_type = "gp3"

volume_size = 30

delete_on_termination = true

}

user_data = <<-EOF

#!/bin/bash

hostnamectl --static set-hostname "${var.ClusterBaseName}-bastion-EC2"

# Config convenience

echo 'alias vi=vim' >> /etc/profile

echo "sudo su -" >> /home/ubuntu/.bashrc

timedatectl set-timezone Asia/Seoul

# Install Packages

apt update

apt install -y tree jq git htop unzip

# Install kubectl & helm

curl -O https://s3.us-west-2.amazonaws.com/amazon-eks/1.30.0/2024-05-12/bin/linux/amd64/kubectl

install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

curl -s https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

# Install eksctl

curl -sL "https://github.com/eksctl-io/eksctl/releases/latest/download/eksctl_Linux_amd64.tar.gz" | tar xz -C /tmp

mv /tmp/eksctl /usr/local/bin

# Install aws cli v2

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip >/dev/null 2>&1

./aws/install

complete -C '/usr/local/bin/aws_completer' aws

echo 'export AWS_PAGER=""' >> /etc/profile

echo "export AWS_DEFAULT_REGION=${var.TargetRegion}" >> /etc/profile

# Install YAML Highlighter

wget https://github.com/andreazorzetto/yh/releases/download/v0.4.0/yh-linux-amd64.zip

unzip yh-linux-amd64.zip

mv yh /usr/local/bin/

# Install kube-ps1

echo 'source <(kubectl completion bash)' >> /root/.bashrc

echo 'alias k=kubectl' >> /root/.bashrc

echo 'complete -F __start_kubectl k' >> /root/.bashrc

git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1

cat <<"EOT" >> /root/.bashrc

source /root/kube-ps1/kube-ps1.sh

KUBE_PS1_SYMBOL_ENABLE=false

function get_cluster_short() {

echo "$1" | grep -o '${var.ClusterBaseName}[^/]*' | cut -c 1-14

}

KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short

KUBE_PS1_SUFFIX=') '

PS1='$(kube_ps1)'$PS1

EOT

# kubecolor

apt install -y kubecolor

echo 'alias kubectl=kubecolor' >> /root/.bashrc

# Install kubectx & kubens

git clone https://github.com/ahmetb/kubectx /opt/kubectx >/dev/null 2>&1

ln -s /opt/kubectx/kubens /usr/local/bin/kubens

ln -s /opt/kubectx/kubectx /usr/local/bin/kubectx

# Install Docker

curl -fsSL https://get.docker.com -o get-docker.sh

sh get-docker.sh

systemctl enable docker

# Create SSH Keypair

ssh-keygen -t rsa -N "" -f /root/.ssh/id_rsa

# IAM User Credentials

export AWS_ACCESS_KEY_ID="${var.MyIamUserAccessKeyID}"

export AWS_SECRET_ACCESS_KEY="${var.MyIamUserSecretAccessKey}"

export ACCOUNT_ID=$(aws sts get-caller-identity --query 'Account' --output text)

echo "export AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID" >> /etc/profile

echo "export AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY" >> /etc/profile

echo "export ACCOUNT_ID=$(aws sts get-caller-identity --query 'Account' --output text)" >> /etc/profile

# CLUSTER_NAME

export CLUSTER_NAME="${var.ClusterBaseName}"

echo "export CLUSTER_NAME=$CLUSTER_NAME" >> /etc/profile

# VPC & Subnet

export VPCID=$(aws ec2 describe-vpcs --filters "Name=tag:Name,Values=service" | jq -r .Vpcs[].VpcId)

echo "export VPCID=$VPCID" >> /etc/profile

export PublicSubnet1=$(aws ec2 describe-subnets --filters "Name=tag:Name,Values=eks-public-01" | jq -r '.Subnets[] | select(.CidrBlock | startswith("172.20")).SubnetId')

export PublicSubnet2=$(aws ec2 describe-subnets --filters "Name=tag:Name,Values=eks-public-02" | jq -r '.Subnets[] | select(.CidrBlock | startswith("172.20")).SubnetId')

export PublicSubnet3=$(aws ec2 describe-subnets --filters "Name=tag:Name,Values=eks-public-03" | jq -r '.Subnets[] | select(.CidrBlock | startswith("172.20")).SubnetId')

echo "export PublicSubnet1=$PublicSubnet1" >> /etc/profile

echo "export PublicSubnet2=$PublicSubnet2" >> /etc/profile

echo "export PublicSubnet3=$PublicSubnet3" >> /etc/profile

export PrivateSubnet1=$(aws ec2 describe-subnets --filters "Name=tag:Name,Values=eks-private-01" | jq -r '.Subnets[] | select(.CidrBlock | startswith("172.20")).SubnetId')

export PrivateSubnet2=$(aws ec2 describe-subnets --filters "Name=tag:Name,Values=eks-private-02" | jq -r '.Subnets[] | select(.CidrBlock | startswith("172.20")).SubnetId')

export PrivateSubnet3=$(aws ec2 describe-subnets --filters "Name=tag:Name,Values=eks-private-03" | jq -r '.Subnets[] | select(.CidrBlock | startswith("172.20")).SubnetId')

echo "export PrivateSubnet1=$PrivateSubnet1" >> /etc/profile

echo "export PrivateSubnet2=$PrivateSubnet2" >> /etc/profile

echo "export PrivateSubnet3=$PrivateSubnet3" >> /etc/profile

# Domain Name

export MyDomain="${var.MyDomain}"

echo "export MyDomain=$MyDomain" >> /etc/profile

# ssh key-pair

aws ec2 delete-key-pair --key-name kp_node

aws ec2 create-key-pair --key-name kp_node --query 'KeyMaterial' --output text > ~/.ssh/kp_node.pem

chmod 400 ~/.ssh/kp_node.pem

EOF

user_data_replace_on_change = true

}
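user_data, and several later steps such as saving N1 to N3 and MyDnsHostedZoneId, persist variables with `echo "export ..." >> /etc/profile`. Re-running any of those steps appends another copy of the line. Because the last definition wins when the profile is sourced this is harmless, but the file keeps growing. A minimal sketch of an idempotent alternative follows; `set_profile_var` is a hypothetical helper, not something the original setup uses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write "export NAME=VALUE" to a profile file, replacing
# any earlier export of NAME instead of appending a duplicate line.
# Usage: set_profile_var <file> <name> <value>
set_profile_var() {
  local file=$1 name=$2 value=$3
  local tmp
  tmp=$(mktemp)
  # Copy every line except a previous export of the same variable
  # (a missing file simply yields an empty copy).
  grep -v "^export ${name}=" "$file" > "$tmp" 2>/dev/null
  echo "export ${name}=${value}" >> "$tmp"
  # Overwrite in place so the file's existing owner and mode are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example: set_profile_var /etc/profile N1 "$N1"
```

Writing back with `cat > file` rather than `mv` keeps the inode, so /etc/profile retains its original ownership and permissions.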

- main.tf

# provider

provider "aws" {

region = var.TargetRegion

}

# caller_identity data

data "aws_caller_identity" "current" {}

# vpc data

data "aws_vpc" "service_vpc" {

tags = {

Name = "service"

}

}

# subnet data

data "aws_subnet" "eks_public_1" {

vpc_id = data.aws_vpc.service_vpc.id

tags = {

Name = "eks-public-01"

}

}

data "aws_subnet" "eks_public_2" {

vpc_id = data.aws_vpc.service_vpc.id

tags = {

Name = "eks-public-02"

}

}

data "aws_subnet" "eks_public_3" {

vpc_id = data.aws_vpc.service_vpc.id

tags = {

Name = "eks-public-03"

}

}

data "aws_subnet" "eks_private_1" {

vpc_id = data.aws_vpc.service_vpc.id

tags = {

Name = "eks-private-01"

}

}

data "aws_subnet" "eks_private_2" {

vpc_id = data.aws_vpc.service_vpc.id

tags = {

Name = "eks-private-02"

}

}

data "aws_subnet" "eks_private_3" {

vpc_id = data.aws_vpc.service_vpc.id

tags = {

Name = "eks-private-03"

}

}

# route table data

data "aws_route_table" "service_public" {

vpc_id = data.aws_vpc.service_vpc.id

tags = {

Name = "service-public"

}

}

data "aws_route_table" "service_private" {

vpc_id = data.aws_vpc.service_vpc.id

tags = {

Name = "service-private"

}

}

# node_group sg

resource "aws_security_group" "node_group_sg" {

name = "${var.ClusterBaseName}-node-group-sg"

description = "Security group for EKS Node Group"

vpc_id = data.aws_vpc.service_vpc.id

tags = {

Name = "${var.ClusterBaseName}-node-group-sg"

}

}

resource "aws_security_group_rule" "allow_ssh" {

type = "ingress"

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = ["172.20.10.100/32"]

security_group_id = aws_security_group.node_group_sg.id

}

# eks module

module "eks" {

source = "terraform-aws-modules/eks/aws"

version = "~>20.0"

cluster_name = var.ClusterBaseName

cluster_version = var.KubernetesVersion

cluster_endpoint_private_access = true

cluster_endpoint_public_access = false

cluster_addons = {

coredns = {

most_recent = true

}

kube-proxy = {

most_recent = true

}

vpc-cni = {

most_recent = true

configuration_values = jsonencode({

enableNetworkPolicy = "true"

})

}

eks-pod-identity-agent = {

most_recent = true

}

aws-ebs-csi-driver = {

most_recent = true

service_account_role_arn = module.irsa-ebs-csi.iam_role_arn

}

snapshot-controller = {

most_recent = true

}

aws-efs-csi-driver = {

most_recent = true

service_account_role_arn = module.irsa-efs-csi.iam_role_arn

}

aws-mountpoint-s3-csi-driver = {

most_recent = true

service_account_role_arn = module.irsa-s3-csi.iam_role_arn

}

}

vpc_id = data.aws_vpc.service_vpc.id

subnet_ids = [data.aws_subnet.eks_private_1.id, data.aws_subnet.eks_private_2.id, data.aws_subnet.eks_private_3.id]

eks_managed_node_group_defaults = {

ami_type = "AL2_x86_64"

}

# eks managed node group

eks_managed_node_groups = {

default = {

name = "${var.ClusterBaseName}-node-group"

use_name_prefix = false

instance_types = [var.WorkerNodeInstanceType]

desired_size = var.WorkerNodeCount

max_size = var.WorkerNodeCount + 2

min_size = var.WorkerNodeCount - 1

disk_size = var.WorkerNodeVolumesize

subnet_ids = [data.aws_subnet.eks_private_1.id, data.aws_subnet.eks_private_2.id, data.aws_subnet.eks_private_3.id]

key_name = "kp_node"

vpc_security_group_ids = [aws_security_group.node_group_sg.id]

iam_role_name = "${var.ClusterBaseName}-node-group-eks-node-group"

iam_role_use_name_prefix = false

}

}

# Cluster access entry

enable_cluster_creator_admin_permissions = false

access_entries = {

admin = {

kubernetes_groups = []

principal_arn = "${data.aws_caller_identity.current.arn}"

policy_associations = {

myeks = {

policy_arn = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"

access_scope = {

namespaces = []

type = "cluster"

}

}

}

}

}

}

# cluster sg - ingress rule add

resource "aws_security_group_rule" "cluster_sg_add" {

type = "ingress"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["172.20.0.0/16"]

security_group_id = module.eks.cluster_security_group_id

depends_on = [module.eks]

}

# shared node sg - ingress rule add

resource "aws_security_group_rule" "node_sg_add" {

type = "ingress"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["172.20.0.0/16"]

security_group_id = module.eks.node_security_group_id

depends_on = [module.eks]

}

# vpc endpoint

module "vpc_vpc-endpoints" {

source = "terraform-aws-modules/vpc/aws//modules/vpc-endpoints"

version = "5.8.1"

vpc_id = data.aws_vpc.service_vpc.id

security_group_ids = [module.eks.node_security_group_id]

endpoints = {

# gateway endpoints

s3 = {

service = "s3"

route_table_ids = [data.aws_route_table.service_private.id]

tags = { Name = "s3-vpc-endpoint" }

}

# interface endpoints

ec2 = {

service = "ec2"

subnet_ids = [data.aws_subnet.eks_private_1.id, data.aws_subnet.eks_private_2.id, data.aws_subnet.eks_private_3.id]

tags = { Name = "ec2-vpc-endpoint" }

}

elasticloadbalancing = {

service = "elasticloadbalancing"

subnet_ids = [data.aws_subnet.eks_private_1.id, data.aws_subnet.eks_private_2.id, data.aws_subnet.eks_private_3.id]

tags = { Name = "elasticloadbalancing-vpc-endpoint" }

}

ecr_api = {

service = "ecr.api"

subnet_ids = [data.aws_subnet.eks_private_1.id, data.aws_subnet.eks_private_2.id, data.aws_subnet.eks_private_3.id]

tags = { Name = "ecr-api-vpc-endpoint" }

}

ecr_dkr = {

service = "ecr.dkr"

subnet_ids = [data.aws_subnet.eks_private_1.id, data.aws_subnet.eks_private_2.id, data.aws_subnet.eks_private_3.id]

tags = { Name = "ecr-dkr-vpc-endpoint" }

}

sts = {

service = "sts"

subnet_ids = [data.aws_subnet.eks_private_1.id, data.aws_subnet.eks_private_2.id, data.aws_subnet.eks_private_3.id]

tags = { Name = "sts-vpc-endpoint" }

}

}

}

# efs Create

module "efs" {

source = "terraform-aws-modules/efs/aws"

version = "1.6.3"

# File system

name = "${var.ClusterBaseName}-efs"

encrypted = true

lifecycle_policy = {

transition_to_ia = "AFTER_30_DAYS"

}

# Mount targets

mount_targets = {

"${var.availability_zones[0]}" = {

subnet_id = data.aws_subnet.eks_private_1.id

}

"${var.availability_zones[1]}" = {

subnet_id = data.aws_subnet.eks_private_2.id

}

"${var.availability_zones[2]}" = {

subnet_id = data.aws_subnet.eks_private_3.id

}

}

# security group (allow - tcp 2049)

security_group_description = "EFS security group"

security_group_vpc_id = data.aws_vpc.service_vpc.id

security_group_rules = {

vpc = {

description = "EFS ingress from VPC private subnets"

cidr_blocks = ["172.20.0.0/16"]

}

}

tags = {

Terraform = "true"

Environment = "dev"

}

}

# S3 Bucket Create

resource "aws_s3_bucket" "main" {

bucket = "${var.ClusterBaseName}-${var.MyDomain}"

tags = {

Name = "${var.ClusterBaseName}-s3-bucket"

}

}
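main.tf allows SSH on the node group SG only from the bastion's fixed private IP (172.20.10.100/32), and allows 443 on the cluster and node SGs, as well as EFS traffic on the EFS SG, from 172.20.0.0/16. When a connection is unexpectedly blocked, it helps to confirm whether the source IP really falls inside a rule's CIDR. The sketch below does that in pure bash, and also prints the full service name (com.amazonaws.<region>.<service>) that short endpoint names such as ecr.dkr resolve to, which is handy for looking the endpoints up with the AWS CLI. All helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 if IP $1 lies inside CIDR $2 (e.g. a cidr_blocks entry of an SG rule).
ip_in_cidr() {
  local ip=$1 net=${2%/*} prefix=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Full VPC endpoint service name for a short name such as "ecr.dkr".
endpoint_service_name() {
  echo "com.amazonaws.$1.$2"
}

# Examples based on the rules in main.tf:
ip_in_cidr 172.20.10.100 172.20.10.100/32 && echo "bastion matches allow_ssh"
ip_in_cidr 172.20.10.100 172.20.0.0/16 && echo "bastion matches cluster_sg_add"
# aws ec2 describe-vpc-endpoints \
#   --filters "Name=service-name,Values=$(endpoint_service_name ap-northeast-2 ecr.dkr)" \
#   --query 'VpcEndpoints[].State'
```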

- iam.tf

#####################

# Create IAM Policy #

#####################

# AWSLoadBalancerController IAM Policy

resource "aws_iam_policy" "aws_lb_controller_policy" {

name = "${var.ClusterBaseName}AWSLoadBalancerControllerPolicy"

description = "Policy for allowing AWS LoadBalancerController to modify AWS ELB"

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"iam:CreateServiceLinkedRole"

],

"Resource": "*",

"Condition": {

"StringEquals": {

"iam:AWSServiceName": "elasticloadbalancing.amazonaws.com"

}

}

},

{

"Effect": "Allow",

"Action": [

"ec2:DescribeAccountAttributes",

"ec2:DescribeAddresses",

"ec2:DescribeAvailabilityZones",

"ec2:DescribeInternetGateways",

"ec2:DescribeVpcs",

"ec2:DescribeVpcPeeringConnections",

"ec2:DescribeSubnets",

"ec2:DescribeSecurityGroups",

"ec2:DescribeInstances",

"ec2:DescribeNetworkInterfaces",

"ec2:DescribeTags",

"ec2:GetCoipPoolUsage",

"ec2:DescribeCoipPools",

"elasticloadbalancing:DescribeLoadBalancers",

"elasticloadbalancing:DescribeLoadBalancerAttributes",

"elasticloadbalancing:DescribeListeners",

"elasticloadbalancing:DescribeListenerCertificates",

"elasticloadbalancing:DescribeSSLPolicies",

"elasticloadbalancing:DescribeRules",

"elasticloadbalancing:DescribeTargetGroups",

"elasticloadbalancing:DescribeTargetGroupAttributes",

"elasticloadbalancing:DescribeTargetHealth",

"elasticloadbalancing:DescribeTags"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"cognito-idp:DescribeUserPoolClient",

"acm:ListCertificates",

"acm:DescribeCertificate",

"iam:ListServerCertificates",

"iam:GetServerCertificate",

"waf-regional:GetWebACL",

"waf-regional:GetWebACLForResource",

"waf-regional:AssociateWebACL",

"waf-regional:DisassociateWebACL",

"wafv2:GetWebACL",

"wafv2:GetWebACLForResource",

"wafv2:AssociateWebACL",

"wafv2:DisassociateWebACL",

"shield:GetSubscriptionState",

"shield:DescribeProtection",

"shield:CreateProtection",

"shield:DeleteProtection"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"ec2:AuthorizeSecurityGroupIngress",

"ec2:RevokeSecurityGroupIngress"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"ec2:CreateSecurityGroup"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"ec2:CreateTags"

],

"Resource": "arn:aws:ec2:*:*:security-group/*",

"Condition": {

"StringEquals": {

"ec2:CreateAction": "CreateSecurityGroup"

},

"Null": {

"aws:RequestTag/elbv2.k8s.aws/cluster": "false"

}

}

},

{

"Effect": "Allow",

"Action": [

"ec2:CreateTags",

"ec2:DeleteTags"

],

"Resource": "arn:aws:ec2:*:*:security-group/*",

"Condition": {

"Null": {

"aws:RequestTag/elbv2.k8s.aws/cluster": "true",

"aws:ResourceTag/elbv2.k8s.aws/cluster": "false"

}

}

},

{

"Effect": "Allow",

"Action": [

"ec2:AuthorizeSecurityGroupIngress",

"ec2:RevokeSecurityGroupIngress",

"ec2:DeleteSecurityGroup"

],

"Resource": "*",

"Condition": {

"Null": {

"aws:ResourceTag/elbv2.k8s.aws/cluster": "false"

}

}

},

{

"Effect": "Allow",

"Action": [

"elasticloadbalancing:CreateLoadBalancer",

"elasticloadbalancing:CreateTargetGroup"

],

"Resource": "*",

"Condition": {

"Null": {

"aws:RequestTag/elbv2.k8s.aws/cluster": "false"

}

}

},

{

"Effect": "Allow",

"Action": [

"elasticloadbalancing:CreateListener",

"elasticloadbalancing:DeleteListener",

"elasticloadbalancing:CreateRule",

"elasticloadbalancing:DeleteRule"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"elasticloadbalancing:AddTags",

"elasticloadbalancing:RemoveTags"

],

"Resource": [

"arn:aws:elasticloadbalancing:*:*:targetgroup/*/*",

"arn:aws:elasticloadbalancing:*:*:loadbalancer/net/*/*",

"arn:aws:elasticloadbalancing:*:*:loadbalancer/app/*/*"

],

"Condition": {

"Null": {

"aws:RequestTag/elbv2.k8s.aws/cluster": "true",

"aws:ResourceTag/elbv2.k8s.aws/cluster": "false"

}

}

},

{

"Effect": "Allow",

"Action": [

"elasticloadbalancing:AddTags",

"elasticloadbalancing:RemoveTags"

],

"Resource": [

"arn:aws:elasticloadbalancing:*:*:listener/net/*/*/*",

"arn:aws:elasticloadbalancing:*:*:listener/app/*/*/*",

"arn:aws:elasticloadbalancing:*:*:listener-rule/net/*/*/*",

"arn:aws:elasticloadbalancing:*:*:listener-rule/app/*/*/*"

]

},

{

"Effect": "Allow",

"Action": [

"elasticloadbalancing:AddTags"

],

"Resource": [

"arn:aws:elasticloadbalancing:*:*:targetgroup/*/*",

"arn:aws:elasticloadbalancing:*:*:loadbalancer/net/*/*",

"arn:aws:elasticloadbalancing:*:*:loadbalancer/app/*/*"

],

"Condition": {

"StringEquals": {

"elasticloadbalancing:CreateAction": [

"CreateTargetGroup",

"CreateLoadBalancer"

]

},

"Null": {

"aws:RequestTag/elbv2.k8s.aws/cluster": "false"

}

}

},

{

"Effect": "Allow",

"Action": [

"elasticloadbalancing:ModifyLoadBalancerAttributes",

"elasticloadbalancing:SetIpAddressType",

"elasticloadbalancing:SetSecurityGroups",

"elasticloadbalancing:SetSubnets",

"elasticloadbalancing:DeleteLoadBalancer",

"elasticloadbalancing:ModifyTargetGroup",

"elasticloadbalancing:ModifyTargetGroupAttributes",

"elasticloadbalancing:DeleteTargetGroup"

],

"Resource": "*",

"Condition": {

"Null": {

"aws:ResourceTag/elbv2.k8s.aws/cluster": "false"

}

}

},

{

"Effect": "Allow",

"Action": [

"elasticloadbalancing:RegisterTargets",

"elasticloadbalancing:DeregisterTargets"

],

"Resource": "arn:aws:elasticloadbalancing:*:*:targetgroup/*/*"

},

{

"Effect": "Allow",

"Action": [

"elasticloadbalancing:SetWebAcl",

"elasticloadbalancing:ModifyListener",

"elasticloadbalancing:AddListenerCertificates",

"elasticloadbalancing:RemoveListenerCertificates",

"elasticloadbalancing:ModifyRule"

],

"Resource": "*"

}

]

}

EOF

}

# ExternalDNS IAM Policy

resource "aws_iam_policy" "external_dns_policy" {

name = "${var.ClusterBaseName}ExternalDNSPolicy"

description = "Policy for allowing ExternalDNS to modify Route 53 records"

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"route53:ChangeResourceRecordSets"

],

"Resource": [

"arn:aws:route53:::hostedzone/*"

]

},

{

"Effect": "Allow",

"Action": [

"route53:ListHostedZones",

"route53:ListResourceRecordSets"

],

"Resource": [

"*"

]

}

]

}

EOF

}

# Mountpoint S3 CSI Driver IAM Policy

resource "aws_iam_policy" "mountpoint_s3_csi_policy" {

name = "${var.ClusterBaseName}MountpointS3CSIPolicy"

description = "Mountpoint S3 CSI Driver Policy"

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "MountpointFullBucketAccess",

"Effect": "Allow",

"Action": [

"s3:ListBucket"

],

"Resource": [

"arn:aws:s3:::${var.ClusterBaseName}-${var.MyDomain}"

]

},

{

"Sid": "MountpointFullObjectAccess",

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:PutObject",

"s3:AbortMultipartUpload",

"s3:DeleteObject"

],

"Resource": [

"arn:aws:s3:::${var.ClusterBaseName}-${var.MyDomain}/*"

]

}

]

}

EOF

}

#####################

# Create IRSA Roles #

#####################

# ebs-csi irsa

data "aws_iam_policy" "ebs_csi_policy" {

arn = "arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy"

}

module "irsa-ebs-csi" {

source = "terraform-aws-modules/iam/aws//modules/iam-assumable-role-with-oidc"

version = "5.39.0"

create_role = true

role_name = "AmazonEKSTFEBSCSIRole-${module.eks.cluster_name}"

provider_url = module.eks.oidc_provider

role_policy_arns = [data.aws_iam_policy.ebs_csi_policy.arn]

oidc_fully_qualified_subjects = ["system:serviceaccount:kube-system:ebs-csi-controller-sa"]

}

# efs-csi irsa

data "aws_iam_policy" "efs_csi_policy" {

arn = "arn:aws:iam::aws:policy/service-role/AmazonEFSCSIDriverPolicy"

}

module "irsa-efs-csi" {

source = "terraform-aws-modules/iam/aws//modules/iam-assumable-role-with-oidc"

version = "5.39.0"

create_role = true

role_name = "AmazonEKSTFEFSCSIRole-${module.eks.cluster_name}"

provider_url = module.eks.oidc_provider

role_policy_arns = [data.aws_iam_policy.efs_csi_policy.arn]

oidc_fully_qualified_subjects = ["system:serviceaccount:kube-system:efs-csi-controller-sa"]

}

# s3-csi irsa

module "irsa-s3-csi" {

source = "terraform-aws-modules/iam/aws//modules/iam-assumable-role-with-oidc"

version = "5.39.0"

create_role = true

role_name = "AmazonEKSTFS3CSIRole-${module.eks.cluster_name}"

provider_url = module.eks.oidc_provider

role_policy_arns = [aws_iam_policy.mountpoint_s3_csi_policy.arn]

oidc_fully_qualified_subjects = ["system:serviceaccount:kube-system:s3-csi-driver-sa"]

oidc_fully_qualified_audiences = ["sts.amazonaws.com"]

}

# aws-load-balancer-controller irsa

module "irsa-lb-controller" {

source = "terraform-aws-modules/iam/aws//modules/iam-assumable-role-with-oidc"

version = "5.39.0"

create_role = true

role_name = "AmazonEKSTFLBControllerRole-${module.eks.cluster_name}"

provider_url = module.eks.oidc_provider

role_policy_arns = [aws_iam_policy.aws_lb_controller_policy.arn]

oidc_fully_qualified_subjects = ["system:serviceaccount:kube-system:aws-load-balancer-controller-sa"]

oidc_fully_qualified_audiences = ["sts.amazonaws.com"]

}

# external-dns irsa

module "irsa-external-dns" {

source = "terraform-aws-modules/iam/aws//modules/iam-assumable-role-with-oidc"

version = "5.39.0"

create_role = true

role_name = "AmazonEKSTFExternalDnsRole-${module.eks.cluster_name}"

provider_url = module.eks.oidc_provider

role_policy_arns = [aws_iam_policy.external_dns_policy.arn]

oidc_fully_qualified_subjects = ["system:serviceaccount:kube-system:external-dns-sa"]

oidc_fully_qualified_audiences = ["sts.amazonaws.com"]

}
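Each IRSA role in iam.tf trusts exactly one service account subject, system:serviceaccount:<namespace>:<name>, and is named <prefix>-<cluster name>. The service accounts created later with kubectl must use the same namespace and name, and their eks.amazonaws.com/role-arn annotation must point at the same role; otherwise the pod cannot assume it. The small sketch below uses hypothetical helpers to build both strings so they can be compared against what is actually deployed.

```shell
#!/usr/bin/env bash
# OIDC "sub" claim of a Kubernetes service account, in the form used by
# oidc_fully_qualified_subjects.
irsa_subject() {
  echo "system:serviceaccount:$1:$2"
}

# Role ARN resulting from role_name = "<prefix>-${module.eks.cluster_name}".
# Usage: irsa_role_arn <account_id> <role_prefix> <cluster_name>
irsa_role_arn() {
  echo "arn:aws:iam::$1:role/$2-$3"
}

# Example (123456789012 is a placeholder account ID):
irsa_subject kube-system aws-load-balancer-controller-sa
irsa_role_arn 123456789012 AmazonEKSTFLBControllerRole pjh-dev-eks
# Compare with the deployed annotation:
# kubectl get sa -n kube-system aws-load-balancer-controller-sa \
#   -o jsonpath='{.metadata.annotations.eks\.amazonaws\.com/role-arn}'
```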

- out.tf

output "public_ip" {

value = aws_instance.eks_bastion.public_ip

description = "The public IP of the bastion EC2 instance."

}

- Set Terraform variables and deploy

# Export Terraform input variables as TF_VAR_ environment variables

export TF_VAR_KeyName=[ssh keypair]

export TF_VAR_MyDomain=[Domain Name]

export TF_VAR_MyIamUserAccessKeyID=[IAM user access key ID]

export TF_VAR_MyIamUserSecretAccessKey=[IAM user secret access key]

export TF_VAR_SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32

# Deploy with Terraform

terraform init

terraform plan

....

Plan: 77 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ public_ip = (known after apply)

terraform apply -auto-approve

....

Apply complete! Resources: 77 added, 0 changed, 0 destroyed.

Outputs:

public_ip = "3.39.249.91"
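Terraform prompts interactively for any variable that has no default and is not set, so a forgotten TF_VAR_ export shows up as an unexpected prompt during plan. A quick pre-flight check that can be run before terraform plan is sketched below; `check_tf_vars` is a hypothetical helper that uses bash indirect expansion (`${!name}`) to read each variable by name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every named variable that is unset or empty,
# and return 1 if at least one is missing.
check_tf_vars() {
  local name missing=0
  for name in "$@"; do
    if [ -z "${!name}" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Usage before `terraform plan`:
# check_tf_vars TF_VAR_KeyName TF_VAR_MyDomain TF_VAR_MyIamUserAccessKeyID \
#   TF_VAR_MyIamUserSecretAccessKey TF_VAR_SgIngressSshCidr
```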

- Initial setup

# SSH into the bastion EC2 instance

ssh -i /Users/jeongheepark/.ssh/PJH-aws-test.pem ubuntu@3.39.249.91

# Update kubeconfig with the EKS cluster credentials

aws eks update-kubeconfig --region $AWS_DEFAULT_REGION --name $CLUSTER_NAME

# Set the namespace that kubectl commands run against

kubens default

# Save the node IPs as variables

N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath='{.items[0].status.addresses[0].address}')

N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2b -o jsonpath='{.items[0].status.addresses[0].address}')

N3=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath='{.items[0].status.addresses[0].address}')

echo "export N1=$N1" >> /etc/profile

echo "export N2=$N2" >> /etc/profile

echo "export N3=$N3" >> /etc/profile

echo $N1, $N2, $N3

172.20.96.238, 172.20.131.113, 172.20.146.18

# Verify SSH access to the nodes

for node in $N1 $N2 $N3; do ssh -i ~/.ssh/kp_node.pem -o StrictHostKeyChecking=no ec2-user@$node hostname; done

2. EKS Networking Feature

- Install the AWS Load Balancer Controller

# Create the Kubernetes service account (SA) and annotate it with the IAM role

kubectl create serviceaccount aws-load-balancer-controller-sa -n kube-system

kubectl annotate serviceaccount aws-load-balancer-controller-sa \

-n kube-system \

eks.amazonaws.com/role-arn=arn:aws:iam::${ACCOUNT_ID}:role/AmazonEKSTFLBControllerRole-${CLUSTER_NAME}

# Verify the Kubernetes SA

kubectl get serviceaccounts -n kube-system aws-load-balancer-controller-sa

NAME SECRETS AGE

aws-load-balancer-controller-sa 0 76s

# Install the AWS Load Balancer Controller

helm repo add eks https://aws.github.io/eks-charts

helm repo update

helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system \

--set clusterName=$CLUSTER_NAME \

--set serviceAccount.create=false \

--set serviceAccount.name=aws-load-balancer-controller-sa

NAME: aws-load-balancer-controller

LAST DEPLOYED: Wed Feb 12 16:07:13 2025

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

AWS Load Balancer controller installed!

# Verify the CRDs

kubectl get crd | grep elb

--

ingressclassparams.elbv2.k8s.aws 2025-02-12T07:07:11Z

targetgroupbindings.elbv2.k8s.aws 2025-02-12T07:07:11Z

# Verify the Deployment (aws-load-balancer-controller)

kubectl get deployment -n kube-system aws-load-balancer-controller

NAME READY UP-TO-DATE AVAILABLE AGE

aws-load-balancer-controller 2/2 2 2 68s

# Verify the Pods (aws-load-balancer-controller)

kubectl get pod -n kube-system | grep aws-load-balancer

aws-load-balancer-controller-586f4565b-fzqc4 1/1 Running 0 85s

aws-load-balancer-controller-586f4565b-mk8bt 1/1 Running 0 85s

- Deploy and verify an Ingress

# [Monitoring] Pod, Service, Ingress, Endpoint

watch -d kubectl get pod,ingress,svc,ep -n game-2048

NAME READY STATUS RESTARTS AGE

pod/deployment-2048-85f8c7d69-hkjzf 1/1 Running 0 70s

pod/deployment-2048-85f8c7d69-kjpdz 1/1 Running 0 70s

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/ingress-2048 alb * k8s-game2048-ingress2-cbd390fc06-311698720.ap-northeast-2.elb.amazonaws.com 80 70s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/service-2048 NodePort 10.100.235.57 <none> 80:30241/TCP 70s

NAME ENDPOINTS AGE

endpoints/service-2048 172.20.130.173:80,172.20.154.149:80 70s

# [Monitoring] ELB

watch -d "aws elbv2 describe-load-balancers --output text --query 'LoadBalancers[*].[LoadBalancerName, State.Code]'"

k8s-game2048-ingress2-cbd390fc06 active

# Download and apply the YAML manifest

curl -s -O https://raw.githubusercontent.com/cloudneta/cnaeblab/master/_data/ingress1.yaml

kubectl apply -f ingress1.yaml

# Verify the TargetGroupBinding

kubectl get targetgroupbindings -n game-2048

NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE

k8s-game2048-service2-e6be746161 service-2048 80 ip 81s

# Inspect the Ingress

kubectl describe ingress -n game-2048

Name: ingress-2048

Labels: <none>

Namespace: game-2048

Address: k8s-game2048-ingress2-cbd390fc06-311698720.ap-northeast-2.elb.amazonaws.com

Ingress Class: alb

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

*

/ service-2048:80 (172.20.130.173:80,172.20.154.149:80)

Annotations: alb.ingress.kubernetes.io/scheme: internet-facing

alb.ingress.kubernetes.io/target-type: ip

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfullyReconciled 41s ingress Successfully reconciled

# Print the ALB URL for web access

kubectl get ingress -n game-2048 ingress-2048 -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "2048 Game URL = http://"$1 }'

2048 Game URL = http://k8s-game2048-ingress2-cbd390fc06-311698720.ap-northeast-2.elb.amazonaws.com

- Install ExternalDNS

# Check the MyDomain variable

echo $MyDomain

pjhtest.click

# Look up the domain's hosted zone

aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." | jq

{

"HostedZones": [

{

"Id": "/hostedzone/Z0....",

"Name": "pjhtest.click.",

"CallerReference": "RISWorkflow-RD:074565b6-c2e7-4371-b5f4-843c58717606",

"Config": {

"Comment": "HostedZone created by Route53 Registrar",

"PrivateZone": false

},

"ResourceRecordSetCount": 2

}

],

"DNSName": "pjhtest.click.",

"IsTruncated": false,

"MaxItems": "100"

}

# Store the hosted zone ID in a variable

MyDnsHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

echo "export MyDnsHostedZoneId=$MyDnsHostedZoneId" >> /etc/profile

echo $MyDnsHostedZoneId

/hostedzone/Z0....
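The stored value keeps the `/hostedzone/` prefix returned by Route 53. It worked as-is for `--hosted-zone-id` and `txtOwnerId` in this lab, but some tools and console URLs expect only the bare ID. A minimal bash sketch for stripping it; `bare_zone_id` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: drop the "/hostedzone/" prefix that Route 53 returns
# in HostedZones[].Id, leaving only the bare zone ID.
bare_zone_id() {
  local id=$1
  echo "${id#/hostedzone/}"
}

# Usage (assumes MyDnsHostedZoneId was set as shown above):
#   bare_zone_id "$MyDnsHostedZoneId"
```

An ID that has no prefix is returned unchanged, so the helper is safe to call twice.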

# Create the Kubernetes service account and annotate it with the IAM role (IRSA)

kubectl create serviceaccount external-dns-sa -n kube-system

kubectl annotate serviceaccount external-dns-sa \
  -n kube-system \
  eks.amazonaws.com/role-arn=arn:aws:iam::${ACCOUNT_ID}:role/AmazonEKSTFExternalDnsRole-${CLUSTER_NAME}

# Verify the service account

kubectl get serviceaccounts -n kube-system external-dns-sa

NAME SECRETS AGE

external-dns-sa 0 21s

# Install ExternalDNS

helm repo add external-dns https://kubernetes-sigs.github.io/external-dns/

helm repo update

helm install external-dns external-dns/external-dns -n kube-system \
  --set serviceAccount.create=false \
  --set serviceAccount.name=external-dns-sa \
  --set domainFilters={${MyDomain}} \
  --set txtOwnerId=${MyDnsHostedZoneId}

NAME: external-dns

LAST DEPLOYED: Thu Feb 13 13:58:37 2025

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

***********************************************************************

* External DNS *

***********************************************************************

Chart version: 1.15.1

App version: 0.15.1

Image tag: registry.k8s.io/external-dns/external-dns:v0.15.1

***********************************************************************

# [Monitoring] Watch the A records in the hosted zone

while true; do aws route53 list-resource-record-sets \
  --hosted-zone-id "${MyDnsHostedZoneId}" \
  --query "ResourceRecordSets[?Type == 'A'].Name" \
  --output text ; date ; echo ; sleep 1; done

--

...

ingress.pjhtest.click.

Thu Feb 13 14:01:04 KST 2025

# ingress annotate (external-dns)

kubectl annotate ingress ingress-2048 -n game-2048 "external-dns.alpha.kubernetes.io/hostname=ingress.$MyDomain"

echo -e "2048 Game URL = http://ingress.$MyDomain"

2048 Game URL = http://ingress.pjhtest.click
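The monitoring loop prints A record names with a trailing dot (`ingress.pjhtest.click.`) because Route 53 returns fully qualified names. To turn that open-ended loop into a check that stops once ExternalDNS has created the record, the name can be compared with a trailing dot appended. A sketch, assuming the `--output text` format used above (names separated by tabs or newlines); `has_a_record` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read the text output of
#   aws route53 list-resource-record-sets --query "ResourceRecordSets[?Type == 'A'].Name" --output text
# from stdin and succeed if the given host name is among the A records.
has_a_record() {
  local name=${1%.}.   # normalize to the FQDN form Route 53 uses (trailing dot)
  tr '\t' '\n' | grep -Fxq -- "$name"
}

# Usage (assumes MyDnsHostedZoneId and MyDomain are set as above):
#   until aws route53 list-resource-record-sets \
#       --hosted-zone-id "${MyDnsHostedZoneId}" \
#       --query "ResourceRecordSets[?Type == 'A'].Name" \
#       --output text | has_a_record "ingress.${MyDomain}"; do
#     sleep 5
#   done
```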

- Clean up lab resources

# Delete the lab resources (Deployment, Ingress, Namespace)

kubectl delete -f ingress1.yaml

- Amazon VPC CNI with Network Policy

# Check the EKS cluster version

aws eks describe-cluster --name $CLUSTER_NAME --query cluster.version --output text

1.30

# Check the VPC CNI version

kubectl describe daemonset aws-node --namespace kube-system | grep amazon-k8s-cni: | cut -d : -f 3

v1.19.2-eksbuild.5

# Check the OS kernel version on a worker node

ssh -i ~/.ssh/kp_node.pem ec2-user@$N1 uname -r

5.10.233-223.887.amzn2.x86_64
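As far as we read the Amazon EKS documentation, VPC CNI network policies need VPC CNI v1.14 or later and a Linux kernel of 5.10 or later on the nodes; both are met here (v1.19.2, 5.10.233). A small bash sketch for checking that from the strings printed above, using GNU `sort -V` for version ordering. The helper names and the minimum versions are assumptions, so verify them against the current docs.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking network policy prerequisites.
# Assumed minimums: VPC CNI 1.14.0, kernel 5.10.

# Normalize "v1.19.2-eksbuild.5" or "5.10.233-223.887.amzn2.x86_64" to "1.19.2" / "5.10.233".
base_version() {
  local v=${1#v}
  echo "${v%%-*}"
}

# Succeed if version $1 >= version $2 (GNU sort -V orders version strings).
version_ge() {
  [[ "$(printf '%s\n' "$1" "$2" | sort -V | head -n1)" == "$2" ]]
}

# Usage:
#   cni=$(kubectl describe daemonset aws-node --namespace kube-system | grep amazon-k8s-cni: | cut -d : -f 3)
#   kernel=$(ssh -i ~/.ssh/kp_node.pem ec2-user@$N1 uname -r)
#   version_ge "$(base_version "$cni")" 1.14.0 && echo "VPC CNI OK"
#   version_ge "$(base_version "$kernel")" 5.10 && echo "kernel OK"
```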

# VPC CNI: confirm that the network policy feature is enabled

kubectl get ds aws-node -n kube-system -o yaml | grep enable-network-policy

--

- --enable-network-policy=true

# Confirm that the BPF file system is mounted on the node

ssh -i ~/.ssh/kp_node.pem ec2-user@$N1 mount | grep -i bpf

--

none on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)

ssh -i ~/.ssh/kp_node.pem ec2-user@$N1 df -a | grep -i bpf

--

none 0 0 0 - /sys/fs/bpf

# Check the container images in the aws-node DaemonSet

kubectl get ds aws-node -n kube-system -o yaml | grep -i image:

image: 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon-k8s-cni:v1.19.2-eksbuild.5

image: 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon/aws-network-policy-agent:v1.1.6-eksbuild.2

image: 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon-k8s-cni-init:v1.19.2-eksbuild.5

# Check the Kubernetes API resource (PolicyEndpoint)

kubectl api-resources | grep -i policyendpoints

--

policyendpoints networking.k8s.aws/v1alpha1 true PolicyEndpoint

# Check the CRDs (PolicyEndpoint)

kubectl get crd

NAME CREATED AT

cninodes.vpcresources.k8s.aws 2025-02-13T04:34:51Z

dnsendpoints.externaldns.k8s.io 2025-02-13T04:58:35Z

eniconfigs.crd.k8s.amazonaws.com 2025-02-13T04:36:57Z

ingressclassparams.elbv2.k8s.aws 2025-02-13T04:51:42Z

policyendpoints.networking.k8s.aws 2025-02-13T04:34:51Z

securitygrouppolicies.vpcresources.k8s.aws 2025-02-13T04:34:51Z

targetgroupbindings.elbv2.k8s.aws 2025-02-13T04:51:42Z

volumegroupsnapshotclasses.groupsnapshot.storage.k8s.io 2025-02-13T04:42:53Z

volumegroupsnapshotcontents.groupsnapshot.storage.k8s.io 2025-02-13T04:42:53Z

volumegroupsnapshots.groupsnapshot.storage.k8s.io 2025-02-13T04:42:53Z

volumesnapshotclasses.snapshot.storage.k8s.io 2025-02-13T04:42:53Z

volumesnapshotcontents.snapshot.storage.k8s.io 2025-02-13T04:42:53Z

volumesnapshots.snapshot.storage.k8s.io 2025-02-13T04:42:53Z

# Check the loaded eBPF programs and data

ssh -i ~/.ssh/kp_node.pem ec2-user@$N1 sudo /opt/cni/bin/aws-eks-na-cli ebpf progs

--

Programs currently loaded :

Type : 26 ID : 6 Associated maps count : 1

========================================================================================

Type : 26 ID : 8 Associated maps count : 1

========================================================================================

ssh -i ~/.ssh/kp_node.pem ec2-user@$N1 sudo /opt/cni/bin/aws-eks-na-cli ebpf loaded-ebpfdata

--

none

# Look up the node security group ID

aws ec2 describe-security-groups --filters Name=group-name,Values=${CLUSTER_NAME}-node-group-sg --query "SecurityGroups[*].[GroupId]" --output text

NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=${CLUSTER_NAME}-node-group-sg --query "SecurityGroups[*].[GroupId]" --output text); echo $NGSGID

echo "export NGSGID=$NGSGID" >> /etc/profile

# Allow TCP port 80 from the entire VPC CIDR in the node security group

aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol tcp --port 80 --cidr 172.20.0.0/16

- Deploy the demo application and test

# Download and unzip the VPC CNI network policy lab files

wget https://github.com/cloudneta/cnaeelab/raw/master/_data/demo_ch2_1.zip

unzip demo_ch2_1.zip

# Enter the directory and inspect it

cd demo_ch2_1

tree

--

.

├── manifest

│ ├── 01_ns.yaml

│ ├── 02_demo_web.yaml

│ ├── 03_red_pod.yaml

│ └── 04_blue_pod.yaml

└── netpolicy

├── 01_deny_all_ingress.yaml

├── 02_allow_redns_red1_ingress.yaml

├── 03_allow_redns_ingress.yaml

├── 04_allow_bluens_blue2_ingress.yaml

├── 05_deny_redns_red1_ingress.yaml

├── 06_deny_all_egress_from_red1.yaml

└── 07_allow_dns_egress_from_red1.yaml

# Deploy the demo application

kubectl apply -f manifest/

# Check the created resources

kubectl get pod,svc -n red

NAME READY STATUS RESTARTS AGE

pod/demo-web-57bb56f5c9-z8hml 1/1 Running 0 20s

pod/red-1 1/1 Running 0 20s

pod/red-2 1/1 Running 0 20s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/demo-web ClusterIP 10.100.183.8 <none> 80/TCP 20s
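The security group rule added earlier opens TCP 80 for 172.20.0.0/16. With the VPC CNI, pods get addresses from the VPC subnets (for example the Ingress backends 172.20.130.173 and 172.20.154.149 earlier), so pod-to-pod traffic falls inside that range, while a ClusterIP such as 10.100.183.8 is a virtual service address outside it. A pure-bash sketch for checking IPv4 CIDR membership; the helper names are hypothetical and IPv6 is not handled.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IPv4 address $1 lies inside CIDR $2 (e.g. 172.20.0.0/16).
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Usage:
#   ip_in_cidr 172.20.130.173 172.20.0.0/16 && echo "covered by the SG rule"
```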

kubectl get pod,svc -n blue

NAME READY STATUS RESTARTS AGE

pod/blue-1 1/1 Running 0 24s

pod/blue-2 1/1 Running 0 24s

# Test connectivity to demo-web (all requests succeed, since no policy is applied yet)

kubectl exec -it red-1 -n red -- curl demo-web

kubectl exec -it red-2 -n red -- curl demo-web

kubectl exec -it blue-1 -n blue -- curl demo-web.red

kubectl exec -it blue-2 -n blue -- curl demo-web.red

- Tests 01 through 07 were carried out as well (the output is extensive, so the write-up is skipped).
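Re-running the four curl checks by hand after every policy gets tedious. A sketch of a helper that runs the same probes non-interactively and prints ALLOWED or BLOCKED per client; it assumes `curl` is available in the client pods (as in the commands above) and uses a 2-second timeout so blocked connections do not hang. `check_access` and `probe_all` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run curl inside a client pod and report whether
# the target answered within 2 seconds (kubectl exec returns curl's exit code).
check_access() {
  local ns=$1 pod=$2 target=$3
  if kubectl exec "$pod" -n "$ns" -- curl -s -m 2 -o /dev/null "$target"; then
    echo "$ns/$pod -> $target: ALLOWED"
  else
    echo "$ns/$pod -> $target: BLOCKED"
  fi
}

# The same four probes as above, for the currently applied policies.
probe_all() {
  check_access red red-1 demo-web
  check_access red red-2 demo-web
  check_access blue blue-1 demo-web.red
  check_access blue blue-2 demo-web.red
}

# Usage: apply one of the netpolicy/*.yaml files, then run
#   probe_all
```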

After each step, check the eBPF state on every node with the command below.

# Check the eBPF data (replace <subcommand> with the aws-eks-na-cli ebpf subcommand that prints the eBPF map data you want)

for i in $N1 $N2 $N3; \
do echo ">> node $i <<"; \
ssh -i ~/.ssh/kp_node.pem ec2-user@$i sudo /opt/cni/bin/aws-eks-na-cli ebpf <subcommand>; \
echo; done

3. EKS Storage Feature

- Amazon EBS CSI Driver - EXT4

# Check the aws-ebs-csi-driver add-on

eksctl get addon --cluster $CLUSTER_NAME | grep ebs

aws-ebs-csi-driver v1.39.0-eksbuild.1 ACTIVE 0 arn:aws:iam::20....:role/AmazonEKSTFEBSCSIRole-pjh-dev-eks

# List the containers in ebs-csi-controller

kubectl get pod -n kube-system -l app=ebs-csi-controller -o jsonpath='{.items[0].spec.containers[*].name}' ; echo

ebs-plugin csi-provisioner csi-attacher csi-snapshotter csi-resizer liveness-probe

# List the containers in ebs-csi-node

kubectl get daemonset -n kube-system -l app.kubernetes.io/name=aws-ebs-csi-driver -o jsonpath='{.items[0].spec.template.spec.containers[*].name}' ; echo

ebs-plugin node-driver-registrar liveness-probe

# List the nodes with CSI drivers registered (CSINode)

kubectl get csinodes

NAME DRIVERS AGE

ip-172-20-139-111.ap-northeast-2.compute.internal 3 4m19s

ip-172-20-151-176.ap-northeast-2.compute.internal 3 4m18s

ip-172-20-98-238.ap-northeast-2.compute.internal 3 4m18s

# [Monitoring 1] Watch pods, PVs, PVCs, and StorageClasses

watch -d kubectl get pod,pv,pvc,sc

NAME READY STATUS RESTARTS AGE

pod/ebs-dp-app 1/1 Running 0 2m1s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE

persistentvolume/pvc-14a7252c-12be-440d-88f4-eb441fd021a9 4Gi RWO Delete Bound default/ebs-dp-claim ebs-dp-sc <unset> 118s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

persistentvolumeclaim/ebs-dp-claim Bound pvc-14a7252c-12be-440d-88f4-eb441fd021a9 4Gi RWO ebs-dp-sc <unset> 2m55s

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

storageclass.storage.k8s.io/ebs-dp-sc ebs.csi.aws.com Delete WaitForFirstConsumer true 4m1s

storageclass.storage.k8s.io/gp2 kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 15m

# [Monitoring 2] Watch the EBS volumes created by dynamic provisioning

while true; do aws ec2 describe-volumes \
  --filters Name=tag:ebs.csi.aws.com/cluster,Values=true \
  --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" \
  --output text; date; sleep 1; done

Fri Feb 14 14:01:47 KST 2025

i-020e82300bedc842d attached vol-0bbdd7d99a6b5170b gp3

Fri Feb 14 14:01:49 KST 2025

i-020e82300bedc842d attached vol-0bbdd7d99a6b5170b gp3

# Create the StorageClass

curl -s -O https://raw.githubusercontent.com/cloudneta/cnaeblab/master/_data/ebs_dp_sc.yaml

cat ebs_dp_sc.yaml | yh

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: ebs-dp-sc
allowVolumeExpansion: true
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
  allowAutoIOPSPerGBIncrease: 'true'
  encrypted: 'true'

kubectl apply -f ebs_dp_sc.yaml

# Check the StorageClass

kubectl describe sc ebs-dp-sc

Name: ebs-dp-sc

IsDefaultClass: No

Annotations: kubectl.kubernetes.io/last-applied-configuration={"allowVolumeExpansion":true,"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"ebs-dp-sc"},"parameters":{"allowAutoIOPSPerGBIncrease":"true","encrypted":"true","type":"gp3"},"provisioner":"ebs.csi.aws.com","volumeBindingMode":"WaitForFirstConsumer"}

Provisioner: ebs.csi.aws.com

Parameters: allowAutoIOPSPerGBIncrease=true,encrypted=true,type=gp3

AllowVolumeExpansion: True

MountOptions: <none>

ReclaimPolicy: Delete

VolumeBindingMode: WaitForFirstConsumer

Events: <none>

# Create the PVC

curl -s -O https://raw.githubusercontent.com/cloudneta/cnaeblab/master/_data/ebs_dp_pvc.yaml

cat ebs_dp_pvc.yaml | yh

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-dp-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
  storageClassName: ebs-dp-sc

kubectl apply -f ebs_dp_pvc.yaml

# Check the PVC (it stays Pending until a pod uses it, because volumeBindingMode is WaitForFirstConsumer)

kubectl describe pvc ebs-dp-claim

Name: ebs-dp-claim

Namespace: default

StorageClass: ebs-dp-sc

Status: Pending

Volume:

Labels: <none>

Annotations: <none>

Finalizers: [kubernetes.io/pvc-protection]

Capacity:

Access Modes:

VolumeMode: Filesystem

Used By: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal WaitForFirstConsumer 6s persistentvolume-controller waiting for first consumer to be created before binding

# Create the pod

curl -s -O https://raw.githubusercontent.com/cloudneta/cnaeblab/master/_data/ebs_dp_pod.yaml

cat ebs_dp_pod.yaml | yh

apiVersion: v1
kind: Pod
metadata:
  name: ebs-dp-app
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: app
    image: centos
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo $(date -u) >> /data/out.txt; sleep 10; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: ebs-dp-claim

kubectl apply -f ebs_dp_pod.yaml
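Because the StorageClass uses `WaitForFirstConsumer`, the PVC only binds after this pod is scheduled. For scripts, a bounded retry is more convenient than the open-ended `while true` monitoring loops. A sketch; `wait_until` and `pvc_bound` are hypothetical names. Newer kubectl releases can express the same thing as `kubectl wait --for=jsonpath='{.status.phase}'=Bound pvc/ebs-dp-claim`, if your version supports jsonpath conditions.

```bash
#!/usr/bin/env bash
# Retry the given command once per second until it succeeds or
# the timeout (first argument, in seconds) has passed.
wait_until() {
  local timeout=$1; shift
  local deadline=$(( SECONDS + timeout ))
  until "$@"; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep 1
  done
}

# Hypothetical check: succeed once the PVC reports phase Bound.
pvc_bound() {
  [[ "$(kubectl get pvc "$1" -o jsonpath='{.status.phase}')" == "Bound" ]]
}

# Usage:
#   wait_until 120 pvc_bound ebs-dp-claim && kubectl get pv
```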

# Check the pod's volumes

kubectl describe pod ebs-dp-app | awk '/Volumes/{x=NR+4}(NR<=x){print}'

Volumes:

persistent-storage:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: ebs-dp-claim

ReadOnly: false

# Check the csi-provisioner log in ebs-csi-controller

kubectl logs -l app=ebs-csi-controller -c csi-provisioner -n kube-system

I0214 04:53:17.283285 1 reflector.go:368] Caches populated for *v1.StorageClass from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 04:53:17.288122 1 reflector.go:368] Caches populated for *v1.Node from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 04:53:17.363876 1 controller.go:824] "Starting provisioner controller" component="ebs.csi.aws.com_ebs-csi-controller-764674f9bb-cw6wr_35d32b69-cf92-4a24-baf1-88af066ebb7b"

I0214 04:53:17.363934 1 volume_store.go:98] "Starting save volume queue"

I0214 04:53:17.370019 1 reflector.go:368] Caches populated for *v1.StorageClass from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 04:53:17.370482 1 reflector.go:368] Caches populated for *v1.PersistentVolume from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 04:53:17.464389 1 controller.go:873] "Started provisioner controller" component="ebs.csi.aws.com_ebs-csi-controller-764674f9bb-cw6wr_35d32b69-cf92-4a24-baf1-88af066ebb7b"

I0214 04:59:42.739404 1 event.go:389] "Event occurred" object="default/ebs-dp-claim" fieldPath="" kind="PersistentVolumeClaim" apiVersion="v1" type="Normal" reason="Provisioning" message="External provisioner is provisioning volume for claim \"default/ebs-dp-claim\""

I0214 04:59:44.995396 1 controller.go:955] successfully created PV pvc-14a7252c-12be-440d-88f4-eb441fd021a9 for PVC ebs-dp-claim and csi volume name vol-0bbdd7d99a6b5170b

I0214 04:59:45.010380 1 event.go:389] "Event occurred" object="default/ebs-dp-claim" fieldPath="" kind="PersistentVolumeClaim" apiVersion="v1" type="Normal" reason="ProvisioningSucceeded" message="Successfully provisioned volume pvc-14a7252c-12be-440d-88f4-eb441fd021a9"

I0214 04:53:25.502075 1 feature_gate.go:387] feature gates: {map[Topology:true]}

I0214 04:53:25.502099 1 csi-provisioner.go:154] Version: v5.1.0

I0214 04:53:25.502111 1 csi-provisioner.go:177] Building kube configs for running in cluster...

I0214 04:53:25.504014 1 common.go:143] "Probing CSI driver for readiness"

I0214 04:53:25.526475 1 csi-provisioner.go:230] Detected CSI driver ebs.csi.aws.com

I0214 04:53:25.526502 1 csi-provisioner.go:240] Supports migration from in-tree plugin: kubernetes.io/aws-ebs

I0214 04:53:25.527407 1 common.go:143] "Probing CSI driver for readiness"

I0214 04:53:25.528968 1 csi-provisioner.go:299] CSI driver supports PUBLISH_UNPUBLISH_VOLUME, watching VolumeAttachments

I0214 04:53:25.529634 1 controller.go:744] "Using saving PVs to API server in background"

I0214 04:53:25.530009 1 leaderelection.go:254] attempting to acquire leader lease kube-system/ebs-csi-aws-com...

# Check the PV

kubectl describe pv | yh

Name: pvc-14a7252c-12be-440d-88f4-eb441fd021a9

Labels: <none>

Annotations: pv.kubernetes.io/provisioned-by: ebs.csi.aws.com

volume.kubernetes.io/provisioner-deletion-secret-name:

volume.kubernetes.io/provisioner-deletion-secret-namespace:

Finalizers: [external-provisioner.volume.kubernetes.io/finalizer kubernetes.io/pv-protection external-attacher/ebs-csi-aws-com]

StorageClass: ebs-dp-sc

Status: Bound

Claim: default/ebs-dp-claim

Reclaim Policy: Delete

Access Modes: RWO

VolumeMode: Filesystem

Capacity: 4Gi

Node Affinity:

Required Terms:

Term 0: topology.kubernetes.io/zone in [ap-northeast-2b]

Message:

Source:

Type: CSI (a Container Storage Interface (CSI) volume source)

Driver: ebs.csi.aws.com

FSType: ext4

VolumeHandle: vol-0bbdd7d99a6b5170b

ReadOnly: false

VolumeAttributes: storage.kubernetes.io/csiProvisionerIdentity=1739508797221-9418-ebs.csi.aws.com

Events: <none>

# Check the csi-attacher log in ebs-csi-controller

kubectl logs -l app=ebs-csi-controller -c csi-attacher -n kube-system

I0214 04:53:20.475892 1 main.go:251] "CSI driver supports ControllerPublishUnpublish, using real CSI handler" driver="ebs.csi.aws.com"

I0214 04:53:20.476488 1 leaderelection.go:257] attempting to acquire leader lease kube-system/external-attacher-leader-ebs-csi-aws-com...

I0214 04:53:20.506348 1 leaderelection.go:271] successfully acquired lease kube-system/external-attacher-leader-ebs-csi-aws-com

I0214 04:53:20.506856 1 leader_election.go:184] "became leader, starting"

I0214 04:53:20.506905 1 controller.go:129] "Starting CSI attacher"

I0214 04:53:20.510114 1 reflector.go:376] Caches populated for *v1.CSINode from k8s.io/client-go@v0.32.0/tools/cache/reflector.go:251

I0214 04:53:20.510346 1 reflector.go:376] Caches populated for *v1.PersistentVolume from k8s.io/client-go@v0.32.0/tools/cache/reflector.go:251

I0214 04:53:20.510575 1 reflector.go:376] Caches populated for *v1.VolumeAttachment from k8s.io/client-go@v0.32.0/tools/cache/reflector.go:251

I0214 04:59:45.871488 1 csi_handler.go:261] "Attaching" VolumeAttachment="csi-68aac92433ad13b231b31f3d72c9cf107e5eaa40103657c2afb428240b8523d6"

I0214 04:59:47.579765 1 csi_handler.go:273] "Attached" VolumeAttachment="csi-68aac92433ad13b231b31f3d72c9cf107e5eaa40103657c2afb428240b8523d6"

I0214 04:53:26.833761 1 main.go:110] "Version" version="v4.8.0"

I0214 04:53:26.834020 1 envvar.go:172] "Feature gate default state" feature="WatchListClient" enabled=false

I0214 04:53:26.834043 1 envvar.go:172] "Feature gate default state" feature="ClientsAllowCBOR" enabled=false

I0214 04:53:26.834052 1 envvar.go:172] "Feature gate default state" feature="ClientsPreferCBOR" enabled=false

I0214 04:53:26.834060 1 envvar.go:172] "Feature gate default state" feature="InformerResourceVersion" enabled=false

I0214 04:53:26.835875 1 common.go:143] "Probing CSI driver for readiness"

I0214 04:53:26.837846 1 main.go:171] "CSI driver name" driver="ebs.csi.aws.com"

I0214 04:53:26.839121 1 common.go:143] "Probing CSI driver for readiness"

I0214 04:53:26.840547 1 main.go:251] "CSI driver supports ControllerPublishUnpublish, using real CSI handler" driver="ebs.csi.aws.com"

I0214 04:53:26.843814 1 leaderelection.go:257] attempting to acquire leader lease kube-system/external-attacher-leader-ebs-csi-aws-com...

# Check the VolumeAttachment

kubectl get VolumeAttachment

NAME ATTACHER PV NODE ATTACHED AGE

csi-68aac92433ad13b231b31f3d72c9cf107e5eaa40103657c2afb428240b8523d6 ebs.csi.aws.com pvc-14a7252c-12be-440d-88f4-eb441fd021a9 ip-172-20-139-111.ap-northeast-2.compute.internal true 4m51s

# Check the disk mounted in the pod

kubectl exec -it ebs-dp-app -- sh -c 'df -hT --type=ext4'

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme1n1 ext4 3.9G 28K 3.9G 1% /data

# Confirm that the pod writes data periodically

kubectl exec ebs-dp-app -- tail -f /data/out.txt

Fri Feb 14 05:03:49 UTC 2025

Fri Feb 14 05:03:59 UTC 2025

Fri Feb 14 05:04:09 UTC 2025

Fri Feb 14 05:04:19 UTC 2025

Fri Feb 14 05:04:29 UTC 2025

Fri Feb 14 05:04:39 UTC 2025

Fri Feb 14 05:04:49 UTC 2025

Fri Feb 14 05:04:59 UTC 2025

Fri Feb 14 05:05:09 UTC 2025

Fri Feb 14 05:05:19 UTC 2025

# Restart the container process (SIGINT to PID 1) and check again

kubectl exec ebs-dp-app -c app -- kill -s SIGINT 1

kubectl exec ebs-dp-app -- tail -f /data/out.txt

Fri Feb 14 05:04:09 UTC 2025

Fri Feb 14 05:04:19 UTC 2025

Fri Feb 14 05:04:29 UTC 2025

Fri Feb 14 05:04:39 UTC 2025

Fri Feb 14 05:04:49 UTC 2025

Fri Feb 14 05:04:59 UTC 2025

Fri Feb 14 05:05:09 UTC 2025

Fri Feb 14 05:05:19 UTC 2025

Fri Feb 14 05:05:29 UTC 2025

Fri Feb 14 05:05:39 UTC 2025

Fri Feb 14 05:05:49 UTC 2025

Fri Feb 14 05:05:59 UTC 2025

# Recreate the pod and check again

kubectl delete pod ebs-dp-app

kubectl apply -f ebs_dp_pod.yaml

kubectl exec ebs-dp-app -- head /data/out.txt

Fri Feb 14 04:59:59 UTC 2025

Fri Feb 14 05:00:09 UTC 2025

Fri Feb 14 05:00:19 UTC 2025

Fri Feb 14 05:00:29 UTC 2025

Fri Feb 14 05:00:39 UTC 2025

Fri Feb 14 05:00:49 UTC 2025

Fri Feb 14 05:00:59 UTC 2025

Fri Feb 14 05:01:09 UTC 2025

Fri Feb 14 05:01:19 UTC 2025

Fri Feb 14 05:01:29 UTC 2025

kubectl exec ebs-dp-app -- tail -f /data/out.txt

Fri Feb 14 05:05:09 UTC 2025

Fri Feb 14 05:05:19 UTC 2025

Fri Feb 14 05:05:29 UTC 2025

Fri Feb 14 05:05:39 UTC 2025

Fri Feb 14 05:05:49 UTC 2025

Fri Feb 14 05:05:59 UTC 2025

Fri Feb 14 05:06:09 UTC 2025

Fri Feb 14 05:06:19 UTC 2025

Fri Feb 14 05:06:45 UTC 2025

Fri Feb 14 05:06:55 UTC 2025

Fri Feb 14 05:07:05 UTC 2025

# Check the size of the disk mounted in the pod (4G)

kubectl get pvc ebs-dp-claim -o jsonpath={.status.capacity.storage} ; echo

4Gi

kubectl exec -it ebs-dp-app -- sh -c 'df -hT --type=ext4'

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme1n1 ext4 3.9G 28K 3.9G 1% /data

# Increase the storage requested by the PVC (4Gi -> 10Gi)

kubectl patch pvc ebs-dp-claim -p '{"spec":{"resources":{"requests":{"storage":"10Gi"}}}}'

persistentvolumeclaim/ebs-dp-claim patched

# Check the size of the mounted disk again (now 10G)

kubectl get pvc ebs-dp-claim -o jsonpath={.status.capacity.storage} ; echo

10Gi

kubectl exec -it ebs-dp-app -- sh -c 'df -hT --type=ext4'

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme1n1 ext4 9.8G 28K 9.8G 1% /data
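The patch only changes the request. The EBS volume is then modified by csi-resizer and the file system is grown on the node (see the `FileSystemResizeRequired` event in the csi-resizer log below). As far as we understand, the PVC's `.status.capacity` is updated once that finishes, so comparing it with `.spec.resources.requests.storage` is a simple completion check. A sketch; `pvc_resize_done` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed when the PVC's reported capacity equals its request.
pvc_resize_done() {
  local pvc=$1 want have
  want=$(kubectl get pvc "$pvc" -o jsonpath='{.spec.resources.requests.storage}')
  have=$(kubectl get pvc "$pvc" -o jsonpath='{.status.capacity.storage}')
  echo "requested=$want capacity=$have"
  [[ -n "$want" && "$want" == "$have" ]]
}

# Usage:
#   until pvc_resize_done ebs-dp-claim; do sleep 5; done
```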

# Check the csi-resizer log in ebs-csi-controller

kubectl logs -l app=ebs-csi-controller -c csi-resizer -n kube-system

I0214 04:53:23.028405 1 leaderelection.go:254] attempting to acquire leader lease kube-system/external-resizer-ebs-csi-aws-com...

I0214 04:53:23.068062 1 leaderelection.go:268] successfully acquired lease kube-system/external-resizer-ebs-csi-aws-com

I0214 04:53:23.069181 1 leader_election.go:184] "became leader, starting"

I0214 04:53:23.069230 1 controller.go:272] "Starting external resizer" controller="ebs.csi.aws.com"

I0214 04:53:23.069394 1 envvar.go:172] "Feature gate default state" feature="WatchListClient" enabled=false

I0214 04:53:23.069452 1 envvar.go:172] "Feature gate default state" feature="InformerResourceVersion" enabled=false

I0214 04:53:23.073774 1 reflector.go:368] Caches populated for *v1.PersistentVolumeClaim from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 04:53:23.075243 1 reflector.go:368] Caches populated for *v1.PersistentVolume from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 05:08:54.811913 1 event.go:389] "Event occurred" object="default/ebs-dp-claim" fieldPath="" kind="PersistentVolumeClaim" apiVersion="v1" type="Normal" reason="Resizing" message="External resizer is resizing volume pvc-14a7252c-12be-440d-88f4-eb441fd021a9"

I0214 05:09:00.392067 1 event.go:389] "Event occurred" object="default/ebs-dp-claim" fieldPath="" kind="PersistentVolumeClaim" apiVersion="v1" type="Normal" reason="FileSystemResizeRequired" message="Require file system resize of volume on node"

I0214 04:53:29.031265 1 main.go:108] "Version" version="v1.12.0"

I0214 04:53:29.031327 1 feature_gate.go:387] feature gates: {map[]}

I0214 04:53:29.035230 1 common.go:143] "Probing CSI driver for readiness"

I0214 04:53:29.038893 1 main.go:162] "CSI driver name" driverName="ebs.csi.aws.com"

I0214 04:53:29.042940 1 common.go:143] "Probing CSI driver for readiness"

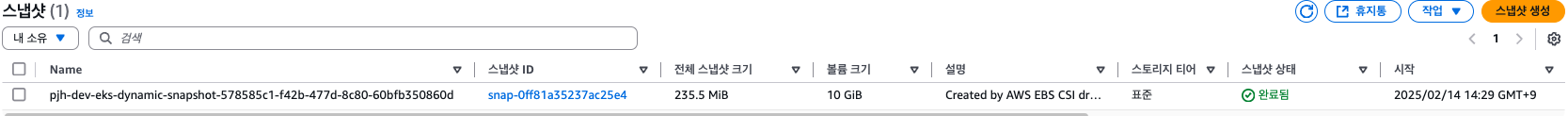

I0214 04:53:29.046266 1 leaderelection.go:254] attempting to acquire leader lease kube-system/external-resizer-ebs-csi-aws-com...

- Snapshot Controller

# Check the snapshot controller CRDs

kubectl get crd | grep snapshot

volumegroupsnapshotclasses.groupsnapshot.storage.k8s.io 2025-02-14T04:53:07Z

volumegroupsnapshotcontents.groupsnapshot.storage.k8s.io 2025-02-14T04:53:07Z

volumegroupsnapshots.groupsnapshot.storage.k8s.io 2025-02-14T04:53:07Z

volumesnapshotclasses.snapshot.storage.k8s.io 2025-02-14T04:53:07Z

volumesnapshotcontents.snapshot.storage.k8s.io 2025-02-14T04:53:07Z

volumesnapshots.snapshot.storage.k8s.io 2025-02-14T04:53:08Z

# Confirm that the snapshot controller is deployed

kubectl get deploy -n kube-system snapshot-controller

NAME READY UP-TO-DATE AVAILABLE AGE

snapshot-controller 2/2 2 2 35m

kubectl get pod -n kube-system -l app=snapshot-controller

NAME READY STATUS RESTARTS AGE

snapshot-controller-786b8b8fb4-44fds 1/1 Running 0 35m

snapshot-controller-786b8b8fb4-5cfwx 1/1 Running 0 35m

# Create a VolumeSnapshotClass

curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-ebs-csi-driver/master/examples/kubernetes/snapshot/manifests/classes/snapshotclass.yaml

cat snapshotclass.yaml | yh

apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: csi-aws-vsc
driver: ebs.csi.aws.com
deletionPolicy: Delete

kubectl apply -f snapshotclass.yaml

# Check the VolumeSnapshotClass

kubectl get vsclass

NAME DRIVER DELETIONPOLICY AGE

csi-aws-vsc ebs.csi.aws.com Delete 8s

# Create a VolumeSnapshot

curl -s -O https://raw.githubusercontent.com/cloudneta/cnaeblab/master/_data/ebs_vol_snapshot.yaml

cat ebs_vol_snapshot.yaml | yh

apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ebs-volume-snapshot
spec:
  volumeSnapshotClassName: csi-aws-vsc
  source:
    persistentVolumeClaimName: ebs-dp-claim

kubectl apply -f ebs_vol_snapshot.yaml

# Check the VolumeSnapshot

kubectl get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

ebs-volume-snapshot false ebs-dp-claim 10Gi csi-aws-vsc snapcontent-578585c1-f42b-477d-8c80-60bfb350860d 6s 7s
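READYTOUSE is still `false` right after creation. In this run the source PVC was deleted next anyway, which worked because the snapshot-controller adds a protection finalizer to the PVC while the snapshot is being cut (see its log further down). In a script it is still clearer to wait for `readyToUse` before tearing anything down. A sketch; `snapshot_ready` and `wait_snapshot_ready` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed once the VolumeSnapshot reports readyToUse=true.
snapshot_ready() {
  [[ "$(kubectl get volumesnapshot "$1" -o jsonpath='{.status.readyToUse}')" == "true" ]]
}

# Poll every 5 seconds; default 120 tries (about 10 minutes).
wait_snapshot_ready() {
  local name=$1 tries=${2:-120} i
  for (( i = 0; i < tries; i++ )); do
    snapshot_ready "$name" && return 0
    sleep 5
  done
  echo "snapshot $name not ready after $tries tries" >&2
  return 1
}

# Usage:
#   wait_snapshot_ready ebs-volume-snapshot && kubectl delete pod ebs-dp-app
```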

kubectl get volumesnapshotcontents

NAME READYTOUSE RESTORESIZE DELETIONPOLICY DRIVER VOLUMESNAPSHOTCLASS VOLUMESNAPSHOT VOLUMESNAPSHOTNAMESPACE AGE

snapcontent-578585c1-f42b-477d-8c80-60bfb350860d false 10737418240 Delete ebs.csi.aws.com csi-aws-vsc ebs-volume-snapshot default 19s
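The two listings report the same size in different units: the VolumeSnapshot shows RESTORESIZE `10Gi`, while the VolumeSnapshotContent shows `10737418240` bytes, because Gi is a binary unit (1Gi = 1024³ bytes). That is also why the restore PVC below is raised from 4Gi to 10Gi: it has to request at least the snapshot's size. A tiny bash sketch of the conversion; it handles only integer values with Ki/Mi/Gi/Ti suffixes (decimal suffixes such as `G` are rejected), and `quantity_to_bytes` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Convert an integer Kubernetes quantity with a binary suffix to bytes.
quantity_to_bytes() {
  local q=$1 n unit
  n=${q%%[!0-9]*}     # leading digits
  unit=${q#"$n"}      # remaining suffix
  case $unit in
    Ki) echo $(( n * 1024 )) ;;
    Mi) echo $(( n * 1024**2 )) ;;
    Gi) echo $(( n * 1024**3 )) ;;
    Ti) echo $(( n * 1024**4 )) ;;
    "") echo "$n" ;;
    *)  echo "unsupported unit: $unit" >&2; return 1 ;;
  esac
}

# Usage:
#   quantity_to_bytes 10Gi   # 10737418240, the RESTORESIZE shown above
```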

# Delete the pod and the PVC

kubectl delete pod ebs-dp-app && kubectl delete pvc ebs-dp-claim

# Create a PVC that restores from the snapshot

curl -s -O https://raw.githubusercontent.com/cloudneta/cnaeblab/master/_data/ebs_snapshot_restore_pvc.yaml

sed -i 's/4Gi/10Gi/g' ebs_snapshot_restore_pvc.yaml

cat ebs_snapshot_restore_pvc.yaml | yh

--

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-snapshot-restored-claim
spec:
  storageClassName: ebs-dp-sc
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  dataSource:
    name: ebs-volume-snapshot
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io

kubectl apply -f ebs_snapshot_restore_pvc.yaml

# Create a pod that uses the restored PVC

curl -s -O https://raw.githubusercontent.com/cloudneta/cnaeblab/master/_data/ebs_snapshot_restore_pod.yaml

cat ebs_snapshot_restore_pod.yaml | grep claimName

--

claimName: ebs-snapshot-restored-claim

kubectl apply -f ebs_snapshot_restore_pod.yaml

# Check the pod's data (the earliest entries come from the snapshot)

kubectl exec ebs-dp-app -- head /data/out.txt

Fri Feb 14 04:59:59 UTC 2025

Fri Feb 14 05:00:09 UTC 2025

Fri Feb 14 05:00:19 UTC 2025

Fri Feb 14 05:00:29 UTC 2025

Fri Feb 14 05:00:39 UTC 2025

Fri Feb 14 05:00:49 UTC 2025

Fri Feb 14 05:00:59 UTC 2025

Fri Feb 14 05:01:09 UTC 2025

Fri Feb 14 05:01:19 UTC 2025

Fri Feb 14 05:01:29 UTC 2025

kubectl exec ebs-dp-app -- tail -f /data/out.txt

Fri Feb 14 05:28:35 UTC 2025

Fri Feb 14 05:28:45 UTC 2025

Fri Feb 14 05:28:55 UTC 2025

Fri Feb 14 05:29:05 UTC 2025

Fri Feb 14 05:29:15 UTC 2025

Fri Feb 14 05:29:25 UTC 2025

Fri Feb 14 05:29:35 UTC 2025

Fri Feb 14 05:29:45 UTC 2025

Fri Feb 14 05:35:16 UTC 2025

Fri Feb 14 05:35:26 UTC 2025

# Check the snapshot-controller log

kubectl logs -l app=snapshot-controller -c snapshot-controller -n kube-system

I0214 04:53:15.936367 1 main.go:169] Version: v8.2.0

I0214 04:53:15.981149 1 leaderelection.go:254] attempting to acquire leader lease kube-system/snapshot-controller-leader...

I0214 04:53:16.011041 1 leaderelection.go:268] successfully acquired lease kube-system/snapshot-controller-leader

I0214 04:53:16.012846 1 snapshot_controller_base.go:255] Starting snapshot controller

I0214 05:29:56.548519 1 snapshot_controller.go:723] createSnapshotContent: Creating content for snapshot default/ebs-volume-snapshot through the plugin ...

I0214 05:29:56.574549 1 snapshot_controller.go:1004] Added protection finalizer to persistent volume claim default/ebs-dp-claim

I0214 05:29:56.600103 1 event.go:377] Event(v1.ObjectReference{Kind:"VolumeSnapshot", Namespace:"default", Name:"ebs-volume-snapshot", UID:"578585c1-f42b-477d-8c80-60bfb350860d", APIVersion:"snapshot.storage.k8s.io/v1", ResourceVersion:"12484", FieldPath:""}): type: 'Normal' reason: 'CreatingSnapshot' Waiting for a snapshot default/ebs-volume-snapshot to be created by the CSI driver.

I0214 05:29:57.604392 1 event.go:377] Event(v1.ObjectReference{Kind:"VolumeSnapshot", Namespace:"default", Name:"ebs-volume-snapshot", UID:"578585c1-f42b-477d-8c80-60bfb350860d", APIVersion:"snapshot.storage.k8s.io/v1", ResourceVersion:"12494", FieldPath:""}): type: 'Normal' reason: 'SnapshotCreated' Snapshot default/ebs-volume-snapshot was successfully created by the CSI driver.

I0214 05:30:31.640417 1 event.go:377] Event(v1.ObjectReference{Kind:"VolumeSnapshot", Namespace:"default", Name:"ebs-volume-snapshot", UID:"578585c1-f42b-477d-8c80-60bfb350860d", APIVersion:"snapshot.storage.k8s.io/v1", ResourceVersion:"12502", FieldPath:""}): type: 'Normal' reason: 'SnapshotReady' Snapshot default/ebs-volume-snapshot is ready to use.

I0214 05:30:31.652781 1 snapshot_controller.go:1086] checkandRemovePVCFinalizer[ebs-volume-snapshot]: Remove Finalizer for PVC ebs-dp-claim as it is not used by snapshots in creation

I0214 04:53:27.155927 1 main.go:169] Version: v8.2.0

I0214 04:53:27.189229 1 leaderelection.go:254] attempting to acquire leader lease kube-system/snapshot-controller-leader...

# Delete the pod, PVC, StorageClass, and VolumeSnapshot

kubectl delete pod ebs-dp-app && kubectl delete pvc ebs-snapshot-restored-claim && kubectl delete sc ebs-dp-sc && kubectl delete volumesnapshots ebs-volume-snapshot

- Amazon EFS CSI Driver

# Check the aws-efs-csi-driver add-on

eksctl get addon --cluster $CLUSTER_NAME | grep efs

aws-efs-csi-driver v2.1.4-eksbuild.1 ACTIVE 0 arn:aws:iam::20....:role/AmazonEKSTFEFSCSIRole-pjh-dev-eks

# Check the EFS CSI driver pods

kubectl get pod -n kube-system -l "app.kubernetes.io/name=aws-efs-csi-driver,app.kubernetes.io/instance=aws-efs-csi-driver"

NAME READY STATUS RESTARTS AGE

efs-csi-controller-7d659648f-6vnck 3/3 Running 0 45m

efs-csi-controller-7d659648f-dqmzg 3/3 Running 0 45m

efs-csi-node-hsqng 3/3 Running 0 45m

efs-csi-node-llk64 3/3 Running 0 45m

efs-csi-node-pgg8w 3/3 Running 0 45m

# List the containers in efs-csi-controller

kubectl get pod -n kube-system -l app=efs-csi-controller -o jsonpath='{.items[0].spec.containers[*].name}' ; echo

--

efs-plugin csi-provisioner liveness-probe

# List the containers in efs-csi-node

kubectl get daemonset -n kube-system -l app.kubernetes.io/name=aws-efs-csi-driver -o jsonpath='{.items[0].spec.template.spec.containers[*].name}' ; echo

--

efs-plugin csi-driver-registrar liveness-probe

# Check the EFS file system

aws efs describe-file-systems

{

"FileSystems": [

{

"OwnerId": "207748141400",

"CreationToken": "terraform-20250214044101276800000003",

"FileSystemId": "fs-066838ae02826df2d",

"FileSystemArn": "arn:aws:elasticfilesystem:ap-northeast-2:20....:file-system/fs-066838ae02826df2d",

"CreationTime": "2025-02-14T13:41:01+09:00",

"LifeCycleState": "available",

"Name": "pjh-dev-eks-efs",

"NumberOfMountTargets": 3,

"SizeInBytes": {

"Value": 6144,

"ValueInIA": 0,

"ValueInStandard": 6144,

"ValueInArchive": 0

},

"PerformanceMode": "generalPurpose",

"Encrypted": true,

"KmsKeyId": "arn:aws:kms:ap-northeast-2:20....:key/619b15fd-bf18-4692-9c1a-cc25b0b6ef8a",

"ThroughputMode": "bursting",

"Tags": [

{

"Key": "Environment",

"Value": "dev"

},

{

"Key": "Name",

"Value": "pjh-dev-eks-efs"

},

{

"Key": "Terraform",

"Value": "true"

},

{

"Key": "aws:elasticfilesystem:default-backup",

"Value": "enabled"

}

],

"FileSystemProtection": {

"ReplicationOverwriteProtection": "ENABLED"

}

}

]

}

# Store the EFS file system ID in a variable

EFS_ID=$(aws efs describe-file-systems --query "FileSystems[?Name=='${CLUSTER_NAME}-efs'].[FileSystemId]" --output text); echo $EFS_ID
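If that query matches nothing (for example, when the file system's Name tag is not `${CLUSTER_NAME}-efs`), EFS_ID is empty and the `sed` substitution below would leave an empty `fileSystemId`. A guarded variant, as a sketch: it assumes the ID format is `fs-` followed by 8 to 40 hex characters (our reading of the EFS API's FileSystemId pattern), uses GNU `sed -i`, and `valid_efs_id` / `set_efs_id` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical check: accept IDs such as fs-066838ae02826df2d.
valid_efs_id() {
  [[ $1 =~ ^fs-[0-9a-f]{8,40}$ ]]
}

# Replace the fs-0123456 placeholder in a manifest only when the ID looks valid.
set_efs_id() {
  local file=$1 id=$2
  if ! valid_efs_id "$id"; then
    echo "refusing to patch $file: '$id' is not an EFS file system ID" >&2
    return 1
  fi
  sed -i "s/fs-0123456/$id/g" "$file"   # GNU sed; on macOS use: sed -i '' ...
}

# Usage:
#   set_efs_id efs_dp_sc.yaml "$EFS_ID" && cat efs_dp_sc.yaml
```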

# Download the YAML file

curl -s -O https://raw.githubusercontent.com/cloudneta/cnaeblab/master/_data/efs_dp_sc.yaml

# Replace the placeholder with the actual file system ID

sed -i "s/fs-0123456/$EFS_ID/g" efs_dp_sc.yaml; cat efs_dp_sc.yaml | yh

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: efs-dp-sc
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap
  fileSystemId: fs-066838ae02826df2d
  directoryPerms: "700"

# Create the StorageClass

kubectl apply -f efs_dp_sc.yaml

# Check the StorageClass

kubectl describe sc efs-dp-sc | yh

Name: efs-dp-sc

IsDefaultClass: No

Annotations: kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"efs-dp-sc"},"parameters":{"directoryPerms":"700","fileSystemId":"fs-066838ae02826df2d","provisioningMode":"efs-ap"},"provisioner":"efs.csi.aws.com"}

Provisioner: efs.csi.aws.com

Parameters: directoryPerms=700,fileSystemId=fs-066838ae02826df2d,provisioningMode=efs-ap

AllowVolumeExpansion: <unset>

MountOptions: <none>

ReclaimPolicy: Delete

VolumeBindingMode: Immediate

Events: <none>

# Download and inspect the YAML file

curl -s -O https://raw.githubusercontent.com/cloudneta/cnaeblab/master/_data/efs_dp_pvc_pod.yaml

cat efs_dp_pvc_pod.yaml | yh

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-dp-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-dp-sc
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: efs-dp-app
spec:
  containers:
    - name: app
      image: centos
      command: ["/bin/sh"]
      args: ["-c", "while true; do echo $(date -u) >> /data/out.txt; sleep 10; done"]
      volumeMounts:
        - name: persistent-storage
          mountPath: /data
  volumes:
    - name: persistent-storage
      persistentVolumeClaim:
        claimName: efs-dp-claim

# Create the PVC and the Pod

kubectl apply -f efs_dp_pvc_pod.yaml
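Because the StorageClass uses `VolumeBindingMode: Immediate`, the PVC should become `Bound` as soon as the provisioner has created the access point, independent of Pod scheduling. A generic polling helper is sketched below as a hypothetical `wait_for_output`: it reruns a command until its output equals an expected string or the attempts run out. The attempt count and interval are arbitrary assumptions.

```shell
# Hypothetical helper: run a command repeatedly until its stdout equals the
# expected string, or give up after a number of attempts.
# Usage: wait_for_output <expected> <max_attempts> <interval_seconds> <command...>
wait_for_output() {
  expected=$1; attempts=$2; interval=$3
  shift 3
  i=1
  while [ "$i" -le "$attempts" ]; do
    out=$("$@" 2>/dev/null)
    if [ "$out" = "$expected" ]; then
      return 0
    fi
    i=$((i + 1))
    # Sleep only if another attempt will follow
    [ "$i" -le "$attempts" ] && sleep "$interval"
  done
  echo "timed out waiting for '$expected' (last output: '$out')" >&2
  return 1
}
```

Usage: `wait_for_output Bound 30 2 kubectl get pvc efs-dp-claim -o jsonpath='{.status.phase}'`. Recent kubectl releases can do the same with `kubectl wait --for=jsonpath='{.status.phase}'=Bound pvc/efs-dp-claim`.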

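# efs-csi-controller: logs of the csi-provisioner container
# The label selector matches both controller replicas, so their output is interleaved and each pod shows at most its last 10 lines;
# the trailing 04:53:20 block comes from the replica that is still waiting for the leader lease.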

kubectl logs -n kube-system -l app=efs-csi-controller -c csi-provisioner -f

I0214 04:53:20.633283 1 reflector.go:368] Caches populated for *v1.PersistentVolumeClaim from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 04:53:20.642553 1 reflector.go:368] Caches populated for *v1.StorageClass from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 04:53:20.723339 1 controller.go:824] "Starting provisioner controller" component="efs.csi.aws.com_efs-csi-controller-7d659648f-dqmzg_90e37c95-c791-4248-9e17-d36d2c0b6797"

I0214 04:53:20.723379 1 volume_store.go:98] "Starting save volume queue"

I0214 04:53:20.727869 1 reflector.go:368] Caches populated for *v1.PersistentVolume from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 04:53:20.728410 1 reflector.go:368] Caches populated for *v1.StorageClass from k8s.io/client-go@v0.31.0/tools/cache/reflector.go:243

I0214 04:53:20.824495 1 controller.go:873] "Started provisioner controller" component="efs.csi.aws.com_efs-csi-controller-7d659648f-dqmzg_90e37c95-c791-4248-9e17-d36d2c0b6797"

I0214 05:41:45.243490 1 event.go:389] "Event occurred" object="default/efs-dp-claim" fieldPath="" kind="PersistentVolumeClaim" apiVersion="v1" type="Normal" reason="Provisioning" message="External provisioner is provisioning volume for claim \"default/efs-dp-claim\""

I0214 05:41:45.498590 1 controller.go:955] successfully created PV pvc-ad722d44-6640-4f61-b095-0e613e7fac3f for PVC efs-dp-claim and csi volume name fs-066838ae02826df2d::fsap-087741edbb54cdd14

I0214 05:41:45.516172 1 event.go:389] "Event occurred" object="default/efs-dp-claim" fieldPath="" kind="PersistentVolumeClaim" apiVersion="v1" type="Normal" reason="ProvisioningSucceeded" message="Successfully provisioned volume pvc-ad722d44-6640-4f61-b095-0e613e7fac3f"

W0214 04:53:20.742969 1 feature_gate.go:354] Setting GA feature gate Topology=true. It will be removed in a future release.

I0214 04:53:20.743086 1 feature_gate.go:387] feature gates: {map[Topology:true]}

I0214 04:53:20.743124 1 csi-provisioner.go:154] Version: v5.1.0

I0214 04:53:20.743147 1 csi-provisioner.go:177] Building kube configs for running in cluster...

I0214 04:53:20.746440 1 common.go:143] "Probing CSI driver for readiness"

I0214 04:53:20.756656 1 csi-provisioner.go:230] Detected CSI driver efs.csi.aws.com

I0214 04:53:20.758186 1 csi-provisioner.go:302] CSI driver does not support PUBLISH_UNPUBLISH_VOLUME, not watching VolumeAttachments

I0214 04:53:20.758682 1 controller.go:744] "Using saving PVs to API server in background"

I0214 04:53:20.758988 1 leaderelection.go:254] attempting to acquire leader lease kube-system/efs-csi-aws-com...
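The `successfully created PV` line shows the CSI volume name as `<file system ID>::<access point ID>`: with `provisioningMode: efs-ap`, each dynamically provisioned PV is backed by its own EFS access point. The hypothetical `split_volume_handle` helper below extracts both IDs from such a line, assuming the log wording stays exactly as shown above. The access point ID could then be passed to `aws efs describe-access-points --access-point-id`.

```shell
# Hypothetical helper: print "<fs-id> <fsap-id>" from a csi-provisioner log line
# containing "csi volume name fs-...::fsap-...". Returns 1 if no handle is found.
split_volume_handle() {
  handle=$(printf '%s\n' "$1" |
    sed -n 's/.*csi volume name \(fs-[0-9a-f]*\)::\(fsap-[0-9a-f]*\).*/\1 \2/p')
  [ -n "$handle" ] || return 1
  printf '%s\n' "$handle"
}
```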

# Check disk usage of the mounted volume from inside the Pod

kubectl exec -it efs-dp-app -- sh -c 'df -hT --type=nfs4'

Filesystem Type Size Used Avail Use% Mounted on

127.0.0.1:/ nfs4 8.0E 0 8.0E 0% /data

# Check the contents of out.txt from inside the Pod

kubectl exec efs-dp-app -- tail -f /data/out.txt

Fri Feb 14 05:42:45 UTC 2025

Fri Feb 14 05:42:55 UTC 2025

Fri Feb 14 05:43:05 UTC 2025

Fri Feb 14 05:43:15 UTC 2025

Fri Feb 14 05:43:25 UTC 2025

Fri Feb 14 05:43:35 UTC 2025

Fri Feb 14 05:43:45 UTC 2025

Fri Feb 14 05:43:55 UTC 2025

Fri Feb 14 05:44:05 UTC 2025

Fri Feb 14 05:44:15 UTC 2025
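The Pod appends `date -u` every 10 seconds, so consecutive lines of `out.txt` should be about 10 seconds apart, as in the output above; a growing gap would suggest the writes to EFS are stalling. A hypothetical awk-based check of those gaps is sketched below, assuming the default `date` format (time of day in the 4th field) and that the samples do not cross midnight.

```shell
# Hypothetical check: read `date -u` lines on stdin and print the gap in
# seconds between each pair of consecutive lines. Assumes the time of day is
# the 4th whitespace-separated field (e.g. "Fri Feb 14 05:42:45 UTC 2025")
# and that the samples do not cross midnight.
line_gaps() {
  awk '{
    split($4, t, ":")
    s = t[1] * 3600 + t[2] * 60 + t[3]
    if (NR > 1) print s - prev
    prev = s
  }'
}
```

For example, `kubectl exec efs-dp-app -- cat /data/out.txt | line_gaps | sort -n | uniq -c` summarizes how often each gap occurs.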

# Delete the Pod, PVC, and StorageClass

kubectl delete -f efs_dp_pvc_pod.yaml && kubectl delete -f efs_dp_sc.yaml

- Amazon Mountpoint S3 CSI Driver

# Check the s3-csi-driver add-on

eksctl get addon --cluster $CLUSTER_NAME | grep s3

aws-mountpoint-s3-csi-driver v1.11.0-eksbuild.1 ACTIVE 0 arn:aws:iam::20....:role/AmazonEKSTFS3CSIRole-pjh-dev-eks

# Inspect the Service Account (s3-csi-driver-sa)

kubectl describe sa -n kube-system s3-csi-driver-sa

Name: s3-csi-driver-sa

Namespace: kube-system

Labels: app.kubernetes.io/component=csi-driver

app.kubernetes.io/instance=aws-mountpoint-s3-csi-driver

app.kubernetes.io/managed-by=EKS

app.kubernetes.io/name=aws-mountpoint-s3-csi-driver

Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::20....:role/AmazonEKSTFS3CSIRole-pjh-dev-eks

Image pull secrets: <none>

Mountable secrets: <none>

Tokens: <none>

Events: <none>
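For IRSA to work, the role the add-on was created with and the `eks.amazonaws.com/role-arn` annotation on `s3-csi-driver-sa` must be the same ARN, as they are above. A hypothetical comparison is sketched below. Rather than relying on a fixed column of the `eksctl get addon` output, it assumes the role is the first whitespace-separated field that looks like `arn:aws:iam::<account>:role/<name>`.

```shell
# Hypothetical helper: print the first whitespace-separated field that looks
# like an IAM role ARN (arn:aws:iam::<account>:role/<name>).
role_arn_in() {
  printf '%s\n' "$1" |
    awk '{ for (i = 1; i <= NF; i++) if ($i ~ /^arn:aws:iam::[^:]*:role\//) { print $i; exit } }'
}

# Succeed only if both texts contain the same (non-empty) role ARN.
same_role() {
  a=$(role_arn_in "$1"); b=$(role_arn_in "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

Usage: `same_role "$(eksctl get addon --cluster $CLUSTER_NAME | grep s3)" "$(kubectl describe sa -n kube-system s3-csi-driver-sa | grep role-arn)" && echo "IRSA role matches"`.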

# Check the containers in the s3-csi-node DaemonSet

kubectl get daemonset -n kube-system -l app.kubernetes.io/name=aws-mountpoint-s3-csi-driver -o jsonpath='{.items[0].spec.template.spec.containers[*].name}' ; echo

--

s3-plugin node-driver-registrar liveness-probe
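The node plugin runs three containers: `s3-plugin` (the driver itself), `node-driver-registrar` and `liveness-probe`. A hypothetical `has_all` helper is sketched below. It checks that a space-separated container list, like the jsonpath output above, contains every expected name as a whole word.

```shell
# Hypothetical helper: has_all "<space-separated list>" name...
# Succeeds only if every name appears as a whole word in the list.
has_all() {
  list=" $1 "
  shift
  for name in "$@"; do
    case "$list" in
      *" $name "*) ;;
      *) echo "missing container: $name" >&2; return 1 ;;
    esac
  done
  return 0
}
```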

# Check the aws-mountpoint-s3-csi-driver components and the nodes they run on

kubectl get deploy,ds -l=app.kubernetes.io/name=aws-mountpoint-s3-csi-driver -n kube-system

--

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/s3-csi-node 3 3 3 3 3 kubernetes.io/os=linux 8m50s

# List every CSI driver pod in kube-system (both the EBS and the S3 driver pods carry this label)

kubectl get pod -n kube-system -l app.kubernetes.io/component=csi-driver

NAME READY STATUS RESTARTS AGE

ebs-csi-controller-764674f9bb-cw6wr 6/6 Running 0 52m

ebs-csi-controller-764674f9bb-tp52q 6/6 Running 0 52m

ebs-csi-node-9sdl2 3/3 Running 0 52m

ebs-csi-node-ps8zk 3/3 Running 0 52m

ebs-csi-node-xkqkm 3/3 Running 0 52m

s3-csi-node-f7srz 3/3 Running 0 52m

s3-csi-node-q98sc 3/3 Running 0 52m
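Because the EBS and S3 driver pods share the `app.kubernetes.io/component=csi-driver` label, the list above mixes both. A hypothetical `check_pods` filter is sketched below. It assumes the default `kubectl get pod` columns (NAME READY STATUS ...), reports every pod that is not `Running` or not fully ready, and prints an `ok/total` summary.

```shell
# Hypothetical check over `kubectl get pod` output (header line first).
# Prints each pod that is not Running or whose READY count is short, then a
# summary "ok/total"; returns non-zero if any pod is unhealthy.
check_pods() {
  awk 'NR > 1 && NF >= 3 {
    total++
    split($2, r, "/")
    if ($3 == "Running" && r[1] == r[2]) ok++
    else print "not ready: " $1 " " $2 " " $3
  }
  END {
    printf "%d/%d\n", ok, total
    exit (ok == total ? 0 : 1)
  }'
}
```

Usage: `kubectl get pod -n kube-system -l app.kubernetes.io/component=csi-driver | check_pods`.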